Stephen Wolfram — Constructing the Computational Paradigm (#148)

Stephen Wolfram is a physicist, computer scientist and businessman. He is the founder and CEO of Wolfram Research, the creator of Mathematica and Wolfram Alpha, and the author of A New Kind of Science.

Transcript

JOE WALKER: Stephen Wolfram, welcome to the podcast.

STEPHEN WOLFRAM: Thank you.

WALKER: Stephen, I'd like to start with business and biographical stuff, and then we'll wend our way into computational science as well as its implications for history, technology and artificial intelligence.

So you're one of those rare figures who's both a brilliant scientist and a brilliant entrepreneur. And kind of like Galileo, you've both made important discoveries and created the tools necessary for making those discoveries (your version of the telescope, of course, being Mathematica and Wolfram Language). Do you view your scientific ability and your entrepreneurial ability as largely separate, or is there some common underlying factor or factors? Because not many great scientists are also great entrepreneurs and vice versa. So what is your fundamental theory of Stephen Wolfram?

WOLFRAM: Well, thinking about things and trying to understand the principles of them is something that has proven very valuable to me both in science and in life in general, and in business and so on. And so it always surprises me that people who think deeply in one area tend to not keep the thinking apparatus engaged when they're confronted with some other area. And I suppose if I have any useful skill in this, it's to keep the thinking apparatus engaged when confronted with practical problems in the world, as well as when confronted with theoretical questions in science and so on.

Mostly I see the kinds of things I do in trying to understand strategy in science and strategy in business as very much the same kind of thing.

Maybe I have one attribute that is a little bit different, which is that I'm interested in people — which is something quite useful if you're going to run companies for a long time. Because otherwise the people just drive you crazy.

But if you are actually interested in people and find the development of people to be a satisfying thing in its own right, that's something that is relevant on the business side, less relevant on the scientific side.

WALKER: Perhaps a third attribute of yours I might add to the mix is optimism.

WOLFRAM: Well, yes. Right. There's a lot that one doesn't see from the inside, so to speak. And I think it is true that when one embarks, as I have done many times in my life, on large projects, very ambitious projects, I don't see them as large or ambitious from the inside. I just see them as a thing I can do next. I don't see them as risky. I just see them as things that can be done. And yes, from the outside, it will look like lots of risk taking, lots of outrageous optimism. From the inside, it's just like "that's the path to go next".

I think for me, it's often one has optimism, but one also says, "What could possibly go wrong?" And having had experience of sort of the things that happen and so on, it is useful to me as a kind of backstop to optimism to always be also thinking about what could possibly go wrong.

And it actually probably fuels the optimism because by the time you realise the worst thing that could go wrong is this and it's not so bad, then it makes one more emboldened to go forward and try and do that next thing that seemed impossible.

WALKER: I read this anecdote about how you learned the word "yes" before you'd learned the word "no" — and that felt kind of representative of the optimism.

WOLFRAM: Yes, that's something my parents kind of would trot out from time to time as an explanation for my sort of later activities.

[6:29] WALKER: That's great. So typically the earliest one would get a PhD is the age of 25. You got yours at the age of 20. So somewhere in your education you compressed five years before the age of 20. How much of that is accounted for just by raw talent? And how much was some hack you learned that other people with less horsepower could adopt as well?

WOLFRAM: Interesting question. First hack was you can learn things just by reading books. That's very old fashioned; these days it would be going to the web or something. But the idea that if you want to learn something, you just go read books about it, you don't have to sit in a class and be told about it, so to speak, that was perhaps hack number one.

Hack number two was: you can invent your own questions. When you're trying to learn about something, yes, there are exercises in the back of the book, but there are things that you might wonder about and by golly, you can go off and explore those things. And often if you'd asked me, "Can you actually answer this question? Is this an answerable question?" I would have said, "I don't know, but I'm going to try and do it."

And somebody else might have said, "You can't go and ask that question. You're a 14 year old kid and that's a question that nobody's asked before and that's not a thing one could do as a 14 year old kid." But I didn't really know that. And so I got into the habit of if I have a question that I'm curious about, I will try and figure out the answer, whether it's something that would be in the back of the book, whether it's something that's been asked before or not.

So for me, those were two important hacks.

Another one is trying to get to the point where you truly understand things. There's a level of understanding that is perfectly sufficient to get an A in the class. (Well, when I was in school, they didn't quite do grades like that, but same idea.)

But can one really get to the point where one can explain the thing to oneself and feel like one really understands it? That was a thing that I progressively really found very satisfying and got increasingly into. And once you really understand it, sort of from the foundations, it's much easier to build up a tall tower than if you kind of roughly know what's going on, but it's all a bit on sand, so to speak.

So those are perhaps three things that I figured out.

Now, I never saw myself as having that much raw talent. In retrospect, I went to top schools in England, and they ranked kids in class, and I was often the top kid and so on. So in retrospect, yes, I was, at least by the ranking systems of the time, a top operative, so to speak.

But that was not my self image. I mean, it was just like, I do the things I find interesting. Perhaps it was a good thing that I didn't say, "Oh, I'm the top kid, and therefore I can do this and that." I was just like, "I can do these things, and they're fun, and that's what I'm going to do."

For me, there's a certain drive to do things and to do things that I think are interesting regardless of the ambient feedback of "yes, that's a good thing to do, or no, that isn't a good thing to do". I suppose I'm perhaps obstinate in that respect, in that there are things I want to do and I'm going to try and figure out how to do them. And that's been a trait that I suppose I've had all my life.

I know when I was a kid, I would do projects, I would get very excited about some particular thing, and I would go and explore that thing, and then I would get to the point I wanted to get to in that thing, and I would move on to the next thing. And I'm a little bit shocked to realise that I kind of still do that now, half a century later.

WALKER: If you hadn't developed an interest in computation back in the early '80s, would Mathematica, or something like Mathematica, have been developed or how long would it have taken? So how contingent was that on you?

WOLFRAM: Well, I think that being able to have a computational assistant for doing mathematical kinds of things — there were already sort of experimental systems; I built my first system back in 1979 for doing this kind of thing — that was a thing that was bubbling forward.

The part that I think is probably more contingent is the principled structure of this kind of symbolic programming idea, the idea that you can represent things in the world in terms of symbolic expressions and transformations for symbolic expressions and so on. I think those things, in retrospect I realise, were more singular and more specific than I might have expected. I mean, in a sense when I set myself this problem back in 1979 of, "Okay, I'm going to try and build this broad computational system. What should its foundations be?"

And at the time I'd spent time doing natural science and physics and so on, and my model for how to think about that was it's like you go try to find the atoms, the quarks, what are the fundamental components from which you can build up computation? And I went back and looked at mathematical logic and understood those kinds of things and tried to learn from that, "How can I find the right primitives for thinking about computation?"

As it turned out, I was either lucky or something, that I got a pretty good idea about what those primitives should be. And I'm not sure that would have been quite something that would have happened the same way.

The precursors of that date back to things like the idea of combinators from 1920 which had existed in the world and been ignored for a really long time. That's probably a particular thing.
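An illustrative aside, not part of the conversation: the idea of representing things as symbolic expressions plus transformation rules can be sketched with the two classic combinators S and K. The Python below is a minimal sketch under stated assumptions. The nested-tuple representation and the helper names `app`, `step` and `normalize` are hypothetical choices made for this example; they are not how Mathematica or Wolfram Language implement anything.

```python
# A term is either a string (a symbol such as "S", "K" or "x")
# or a pair (f, x) meaning "f applied to x".
# Representation and helper names are assumptions for illustration only.

def app(*terms):
    """Left-associated application: app(a, b, c) == ((a, b), c)."""
    result = terms[0]
    for t in terms[1:]:
        result = (result, t)
    return result


def step(term):
    """Apply one leftmost-outermost rewrite; return (new_term, changed)."""
    if isinstance(term, tuple):
        f, x = term
        # Rule K a b -> a, where term == (("K", a), b)
        if isinstance(f, tuple) and f[0] == "K":
            return f[1], True
        # Rule S a b c -> a c (b c), where term == ((("S", a), b), c)
        if isinstance(f, tuple) and isinstance(f[0], tuple) and f[0][0] == "S":
            a, b, c = f[0][1], f[1], x
            return ((a, c), (b, c)), True
        # No rule applies at the root: try the function part, then the argument.
        new_f, changed = step(f)
        if changed:
            return (new_f, x), True
        new_x, changed = step(x)
        if changed:
            return (f, new_x), True
    return term, False


def normalize(term, max_steps=1000):
    """Rewrite until no rule applies, giving up after max_steps rewrites."""
    for _ in range(max_steps):
        term, changed = step(term)
        if not changed:
            return term
    raise RuntimeError("no normal form found within max_steps")


identity_applied = normalize(app("S", "K", "K", "x"))
print(identity_applied)
```

Here `S K K` behaves like an identity function: the whole computation is nothing more than repeated pattern-matching and replacement on a symbolic expression.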

The other thing is the — you might call it ambition or vision — to say, "We're going to try and describe the whole world computationally." That was a thing that I steadily got into. And that was a thing that I think was not really something other people had in mind and have had in mind in the intervening years. I think it's something where perhaps it's just too big a project. It's like, can you really conceptualise a project that big? You mentioned optimism. That's probably a necessary trait if one's going to imagine that one can try to do a project that's kind of that grand, that big. When would people have decided that one could do something that big? It's not clear that happens for a really long time.

The thing that I've been interested in very recent times, looking at LLMs and AI and so on, and realising that they're showing us that there's kind of a semantic grammar of language, there's ways that language is put together to have meaning and so on. And I'm realising, well, Aristotle did a little bit on this back 2000 years ago and managed to come up with logic — and that was a pretty good idea. And we could have come up with sort of more general formalisations of the world anytime in the last couple of thousand years, but nobody got around to doing it. And I've done little pieces of that — maybe not so little, but done pieces of that — and hopefully we'll get to do more of that. But it's sort of shocking that in 2000 years, although it was something that could have been thought about, people just didn't get oriented to think about it.

And it's something where I suppose I've been fortunate in my life that I've worked on a lot of things that were things I wanted to work on which were not quite in the mainstream of what people were thinking about, and they worked out pretty well. And so that means that the next time I'm thinking, "Well, I'm going to think about something that's sort of outside the mainstream," I kind of think it's going to go okay. After you've done a few steps of that, you kind of feel that, yes, you feel a bit empowered to say, "Yeah, I'm thinking about it, I think it makes sense to me, I'm really going to do something with this," rather than, "Look, how can it possibly make sense? Look at all these other people who say it doesn't make sense or say that isn't the direction things should go in."

So I was lucky because I started doing science when I was pretty young, and I was in an area that was very active at the time — particle physics — and I was able to make a little bit of progress. And that gave me good, positive, personal feedback to get emboldened to try and do bigger, more difficult, further outside the box kinds of things.

[16:03] WALKER: Andy Matuschak, a previous guest of the podcast, and Michael Nielsen have this article called 'How Can We Develop Transformative Tools for Thought?' — "tools for thought" being tools for augmenting human intelligence. Examples include writing, language, computers, music, Mathematica. And in the essay they assert a general principle that good tools for thought arise mostly as a byproduct of doing original work on serious problems. Tools for thought tend either to be created by the people doing that work or people working very closely to them.

Just out of curiosity — and I assume you probably agree with that principle — can you think of any historical counterexamples, where someone has actually set out primarily to create a new tool for thought without being connected with an original problem?

WOLFRAM: Well, this kind of goes along with when entrepreneurs ask me how should they invent the product for their company, and the first thing I say is, "Invent a product you actually want; it's hard to invent the product for the imaginary consumer that isn't like you." And so in my own efforts, certainly the things I built as tools, I'm typically user number one. I'm the persona that I most want the tool to be able to serve.

I would say that when it comes to tools for thinking about things and the extent to which they are disembodied from... there are things where people invent abstract ideas that don't have application to the world. I mean, a famous example in mathematics is transfinite numbers which were invented, they're interesting, they have all kinds of structure, and it's been 100 and something years, and every so often I say, "Finally, I'm going to find a use for transfinite numbers." And it doesn't usually work out.

Another thing to understand is if you look at the progress of science, there are often experimental tools that get created — whether it's telescopes, microscopes, whatever. I think that the invention of the telescope — how that was plugged into things one would think about, it wasn't really. It was invented as a piece of invention for practical uses. And then the fact that it turned out to be this thing that unlocked the discoveries of the moons of Jupiter — it came after the creation of the tool.

But in terms of ways that people have of thinking about things, a big example that you mentioned is language which is our apparatus for taking the thoughts that swirl around in our brains and packaging them in such a way that we can communicate them elsewhere and even play them back to ourselves. And I think that's something which, by its very nature, emerges from the thoughts that are happening inside.

I suppose another example of this would be when it comes to things like artificial languages, where people say, "Let's invent a language that will lead us to think in certain ways."

I'm thinking through historical examples here.

There are definitely, in science, there's definitely plenty of things where the experimental tool has been invented independent of people thinking about how it will be used. Just as a matter of "well, this is the next thing we can measure" type thing, without kind of thinking, "Well, if we measure this, then it will fit into our whole framework of thinking about things."

In terms of the history of tools of thought at a more abstract level, they're not so many. I mean, you listed off many of the major ones. It's sort of interesting, if you take mathematics as an example, which is in a sense an organising tool for thinking about things, what was mathematics invented for? What were the ideas of numbers and things like that invented for?

They were invented for the practical running of cities in ancient Babylon. They were invented as a way of abstracting life to the point where it could be organised to be governed and so on.

But things like numbers, and sort of the early times of mathematics, were not invented, I think, so much as a way of extending our ability to think about things; they were invented as a sort of practical tool for taking things which were going on anyway and making them kind of more, I don't know, governable and organised or something.

So perhaps that's an example of a place where the notion of this abstraction kind of happened for very practical reasons. Now, that's why by the time we get to 1687 and Isaac Newton and his Principia Mathematica, its full title is, in English, Mathematical Principles of Natural Philosophy. So in his time, he was the one who got to make this connection between this already-built tool of thought, in a sense — of mathematics — and, in his case, things in the natural world.

So it's a good prompt for thinking about how one imagines the history of intellectual development for our species. And it's always a thing where, as we fill in a certain amount of abstraction, a certain set of principles, we get to put another level on the kind of tower of intellectual things that we can think about. Each new kind of paradigm that we invent lets us build a bit taller so we can potentially get to the next paradigm.

WALKER: So you raised the more general claim about the history of ideas, namely that technology often precedes science.

WOLFRAM: Yes.

WALKER: I'm going to take that as an opportunity for a quick digression, and then I'll come back to tools for thought.

[23:23] So if it is indeed true that technology often precedes science, and in fact, in A New Kind of Science, you raise the question, "Well, why wasn't the computational paradigm stumbled upon earlier?" And the answer you give is that the technology of computing that had coalesced by the time you were looking at these problems was an important enabling factor for two reasons. Firstly, there were certain experiments that could only be done with that contemporaneous technology. And secondly, being exposed to practical computing helped you to develop your intuition about computational science.

So if that's true, does it worry you that some technology currently inconceivable to us could in future provide a basis for an even more fundamental kind of science?

WOLFRAM: Well, I'm not sure it worries me. I think that seems kind of exciting. One of the things I've come to realise from studying recent things about fundamental physics is we perceive the universe the way we perceive the universe to be, because of who we are. That is, our sensory apparatus for perceiving the world is what gives us the laws of physics that we have. So we talk about space and time and so on, and the fact that we imagine that there is a notion of a state of things in space at successive moments in time is a consequence of the fact that as we look around we see 100 metres away or something, and the time it takes light to come from 100 metres away to us is really short compared to the time it takes us to realise what we saw.

That's why we kind of imagine what happens in space everywhere at successive moments in time. We might be built differently. We might be a different physical size relative to the speed of light and so on, and we would have a different view of how the universe is put together. So I think that the way that we have of understanding science, understanding the universe, is deeply dependent on the way we are as perceivers of the universe. And as we advance, maybe have more sensory apparatus, we build more tools that allow us to sense aspects of the universe we couldn't sense before, we necessarily will start to think differently about how the universe works. And I think it's kind of a thing that goes hand in hand — both the way that we kind of expand our existence, and the things that we can perceive about how the universe works, these are going to sort of expand together.
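An illustrative aside, not part of the conversation: the 100-metre example can be checked with a line of arithmetic. The sketch below assumes a perception time of about a tenth of a second; that figure is a rough order-of-magnitude assumption introduced here, not a number from the interview.

```python
SPEED_OF_LIGHT_M_PER_S = 299_792_458  # exact by the SI definition of the metre
distance_m = 100.0                    # the distance mentioned in the conversation
assumed_perception_time_s = 0.1       # ASSUMPTION: rough order of magnitude only

# Time for light to cross the distance, and how it compares with perception.
light_travel_time_s = distance_m / SPEED_OF_LIGHT_M_PER_S
ratio = assumed_perception_time_s / light_travel_time_s

print(f"light travel time: {light_travel_time_s:.3e} s")
print(f"assumed perception time is about {ratio:,.0f} times longer")
```

Under that assumption the light delay is a fraction of a microsecond, several hundred thousand times shorter than the time taken to register what was seen, which is the gap the argument relies on.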

Whether it was the telescope, the microscope, the electronic amplifier. These all led to different views of what existed in our universe that we were simply unaware of before that. And I think it is likely, in fact certain, in fact necessarily the case, that as we extend our sensory domain we will end up sampling aspects of what I call the ruliad — this kind of limit of all possible computational processes, this kind of universe of all possible universes. We'll inevitably sample more of that.

That there can be sort of different pieces of science, different pieces of the story of how the universe works, that we will get to — I find that inevitably the case.

Now have we reached the bottom of the whole thing? With the ruliad and with all these ideas about fundamental physics, are we at the end of that particular path of understanding what's it all made of?

I kind of think yes. I think we got to the bottom. Now there's a long way from the bottom to where we are, and there are undoubtedly many kinds of science that one could expect to build that live in that intervening layer between what's at the bottom... What's at the bottom is both deeply abstract and in some sense it's necessary that it works that way — but it also doesn't tell us that much. That it tells us that that is the foundation is interesting. I think it's great. But also, to be able to say things about what we could possibly sense in the world, there's layers of what we have to figure out to know that.

One of the things that comes out of this idea of computational irreducibility is this realisation that there's an infinite number of pockets of computational reducibility, an infinite number of places where we don't just have to say, "Oh, we just have to wait for the computation to take its course to know what's going to happen," where we can say, "We discovered something. We know how to jump ahead in this particular case." There's an infinite collection of those places where we can discover something that allows us to jump ahead, that allows us to make an invention, that allows us to make a new kind of scientific law or something.

That's the place where there's an endless frontier of things to do, and that's a place where there will undoubtedly be kinds of science that are developed by looking at different kinds of pockets of reducibility than the ones we have seen so far.
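An illustrative aside, not part of the conversation: one way to picture "jumping ahead" is a cellular automaton whose state at step t can be written down directly, without running the t intermediate steps. The sketch below uses rule 90 (each cell becomes the XOR of its two neighbours), started from a single live cell. Its pattern is Pascal's triangle modulo 2, so a cell can be computed from a binomial-coefficient parity test. The choice of rule 90 and the function names are assumptions made for this example.

```python
def rule90_step(cells):
    """One step of rule 90 on a set of live cell positions on an infinite line."""
    candidates = {x + d for x in cells for d in (-1, 1)}
    # A cell is live next step iff exactly one of its two neighbours is live now.
    return {x for x in candidates if ((x - 1) in cells) != ((x + 1) in cells)}


def rule90_simulate(t):
    """Run the rule step by step from a single live cell at position 0."""
    cells = {0}
    for _ in range(t):
        cells = rule90_step(cells)
    return cells


def rule90_jump(t):
    """Jump straight to step t using the known shortcut.

    Cell x = 2*k - t is live iff the binomial coefficient C(t, k) is odd,
    which by Lucas' theorem holds iff the set bits of k are a subset of
    the set bits of t.
    """
    return {2 * k - t for k in range(t + 1) if (k & t) == k}


# The step-by-step run and the shortcut agree at every step checked.
agree = all(rule90_simulate(t) == rule90_jump(t) for t in range(64))
print(agree)
```

The shortcut exists only because rule 90 has this special additive structure. For a system without such structure, the step-by-step loop is the only method on offer here, which is the contrast the passage is drawing.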

Maybe I'm wrong, but I think we, for better or worse, hit the bottom in terms of understanding what the ultimate machine code of how things are put together is. And in a sense, as I say, it's a very abstract, general, inevitable kind of structure. But the real richness of our experience comes in the layers that exist above that.

[29:45] WALKER: So coming back to tools for thought, we were talking about how when one is designing such tools, it's important to have some kind of tangible contact with the problem that the tool is designed to solve. And one of the things I find interesting is that Mathematica's functionality has expanded over the years into domains where you don't actually have domain expertise. For example, you bundle libraries with detailed primitives for earth science modelling. I was curious what incites projects like that and how is geological domain expertise imported into Wolfram Research?

WOLFRAM: Well, one of the things that's been great about my job and my life, so to speak, is that I am sort of forced to have some kind of fairly deep understanding of a very broad range of areas. You asked me about life hacks that have let me do the interesting stuff I've been able to do. One of them is I've been forced to understand at a foundational level a very broad range of areas, because what I've discovered is that if you're trying to do language design, you're trying to make the best tool for people to be able to do different kinds of things, the way you have to do that is by drilling down to get to the primitives of what has to be done in that area. And that requires that you have a deep understanding of that area.

Within the company we have a very eclectic collection of people with lots of different backgrounds and we always have this internal database about who knows what, where people talk about the different things they know about. And so, okay, we need somebody who knows about geology. Alright, let's go to the "who knows what" database; there's probably somebody who knows about geology.

But beyond that, we've been lucky enough to have a very broad spectrum of top research people around the world use our tools. And so it's always been an interesting thing when we need to know about some very specialised thing, it's like, "Well, who's the world expert in this?" It's often very satisfying to discover that they've been longtime users of our technology. But then we contact them and say, "Hey, can you help us understand this?"

I have noticed — particularly in building Wolfram Alpha, which has particularly wide reach in terms of the different domains that we're dealing with — that one of the things about setting up computational knowledge there has been unless there's an expert involved in that process, you'll never get it right because there's always that extra little "Oh, but everybody in this area knows this."

It's kind of like you see things that happen in the world where like in the tech industry or something, people will be saying, "Oh, can you believe this or that happened," or, "Can you believe this company turned out to be a sham," or whatever else. It's kind of like, "Look, I'm in this industry, everybody in this industry has certain intuition about what's going on and kind of knows how this works." But if you're outside of that world, it's kind of difficult to develop that intuition.

One of the things I think I've gotten better at over the years is, first of all, I know that is a thing in an area, that there's some kind of intuition, some way of thinking in that area, and I know that I don't know it if it's something I've never been exposed to. And I've kind of learnt that you have to sort of feel your way around talking to people in that area, trying to get a feeling for how people think in that area. And usually you can get to be able to do that, but you have to realise that it's the thing you have to do, and it's not self-evident how this area works, even if you know the sort of core facts of the area.

[33:31] WALKER: That's interesting. So Wolfram Research was founded in 1987. It's been a private company ever since. What are the factors that have gone into the decision to remain private? Because I think you toyed with the idea of taking it public back in '91?

WOLFRAM: Yes, that's right. Yes. So, look, people sometimes say everybody has a boss. But I don't.

And that's great, because it means that I can get to do things where I take responsibility for what I do, and often it works out and that's great, but sometimes it doesn't. And I think that the sort of freedom to do what one thinks one should do, rather than having a responsibility to other people to say, "Hey, look, you put all your money into this..." I would feel, in that case, a responsibility to the folks who put all the money in, or the public or whoever else it is, to not lose their money or whatever. It's been very nice to have the freedom to just be able to do the things that I think we should do.

It's a complicated thing because our company is about 800 people right now, and that is a size that I kind of like. I think maybe we could expand to maybe twice that. If you say, "Well, would you like a company that has 50,000 employees?" The answer is, "Not particularly." That's a ship that's a lot harder to turn.

If you have a company that has only 50 employees, that has the problem that there's a lot of single points of failure, there's a lot of things where there just isn't a structure that lets you get certain kinds of things done. And also, as the thing gets bigger, the thing I notice is it's like, okay, we could have a big sort of tentacle that does this or that thing, which I don't really know about and I don't really care about. And it's like, okay, that could be a thing, we could do that. And it's necessary for the practicalities of the world that you have things that are commercially successful, and sometimes those involve pieces that you don't personally that much care about.

But for me, I view the company as a sort of machine for turning ideas that I have into real things. And there's a certain ergonomic aspect of a certain kind of character and size of company that works well for that. And having something where a lot of pieces of it, I don't really know how they work and what they're doing, it's like, well, you can do that. It might be a commercially viable thing to do. But it's not something that intellectually and personally I find as satisfying.

Another thing that tends to happen is there are always these trends. People say, "Oh, yeah, you're a successful tech company. You should go public. You should do this, you should do that." There's some trend about how it should work.

My own point of view has been I try and think about what makes sense and I try to do what makes sense, and it often isn't what the trend is. People saying, "That's really stupid, everybody's doing this, you should be doing that." It's like, "Well..." I just try and do the things that I do. And that's worked out pretty well for me, and that's given me sort of an attitude that I should just do the things I think I should do, rather than following the "Go public." "Do an ICO," these days or a few years ago, or make up tokens or do something. They're just all these different trends. And I suppose at some level I've been a very simple-minded and conservative business person, because we just make a product that people find useful, they buy it, and that allows us to go on and make new products and improve the thing we have.

For me personally, the greatest satisfaction comes from making a great thing. There are people I know and respect where the thing they most want to do is make the most money. I don't particularly care about that. I will always choose the door that says "do the more interesting thing". Of course, one has to be practical and one only gets to go on doing interesting things if one has a viable commercial enterprise. But for me, the goal is to do the interesting things, and that's the value function that I'm applying to the things that I do.

WALKER: Where do you think the threshold is in terms of headcount, when the ship gets too difficult to turn?

WOLFRAM: That's an interesting question.

WALKER: I guess maybe it depends on the network structure of the company as well.

WOLFRAM: A little bit. It depends on what you're doing, because there are some things that just require a lot of people. What I've done in our company is automate everything.

In other words, our company, if you look at the technology we're producing it should be 10,000 people — in terms of technology produced per unit time it should be at least 10,000 people to be able to do that. But it isn't, because the product that we make is something that automates the making of things. But we very much applied that ourselves and that's been why it's been possible. So in a sense, our company is full of great people and some great AIs, in effect, that let us make things and leverage a smaller number of people to be able to do those kinds of things.

I would say that the size that we're at I can pretty much know everything that we're doing at some level. If you say, "What's the list of all the projects in the company?" Okay, it's a sort of joke at our company that there are more projects than there are people in our company. But that's some number of hundreds of projects. And I can have some idea what's going on, on all of those things.

If you get to a structure where there are actually 5000 projects going on, then that's not something where a CEO can kind of really keep all of that in mind. And that, I think, becomes a more difficult — a different — kind of enterprise to manage.

I think it also depends on the culture of the company, which is an important aspect of these things. For our company, it's been interesting. It's had multiple phases. The company has been around for 36 years now, but it's had various phases. I mean, at the beginning it was all about Mathematica, developing Mathematica, a very successful product right out of the starting gate. And then I went off and spent a decade working on basic science. I mean, I was still CEOing the company, but my priority at the time was: keep the company stable, I want to go off and do this basic science. The company kind of grew up very nicely during that period of time, as in, it went from a company that was probably not so well organised to a company that was quite well organised, even if it wasn't as innovative during that period of time.

Then I came back in 2002 from that, and I'm like, "Okay, now we have to really push to innovate." And by that point, the company had a pretty good stable structure. It took some effort to say, "Okay, now we're going to innovate." People were saying, "Why are we doing this? We have a good business going. We're doing the things that we've already been doing." But it took some force of will to turn the company into something where there could be innovation. And then what's developed very nicely is that people recognise that we do new things, and people recognise that the new things usually work out. And so, for example, when LLMs came on the scene, I very quickly said, "We're going to work seriously on this." And it happens to dovetail very beautifully with the technology we've spent so many decades developing, but I didn't have a lot of pushback. It wasn't like people saying, "Oh, why are we going to do this?" Et cetera, et cetera, et cetera.

I try to have a company culture in which people do think for themselves. And so I definitely get pushback, when people say, "That argument doesn't make sense." It's kind of been amusing with virtual reality and augmented reality: back a decade ago, I was like, "We should be doing this." But some of the people at the company who'd been around in the early '90s said, "You said that in the early '90s, and it turned out to be totally silly at that time." And so now we're just about to see whether people take it seriously again now. And I would say that it's kind of a mixed bag. I'm not sure how seriously I take it right now either.

But developing this kind of culture where people have anticipation of innovation, and anticipation that things change, that's important. How much that can scale to how many people, I don't really know. And what tends to happen is you both have to have people think for themselves, but you have to have some commonality of purpose and mission so that it isn't just a bunch of fiefdoms in silos doing all kinds of different things that don't fit together. And I think there's some kind of ratio of the force of will of the CEO versus the extent of independent thinking in different parts of the company. And I don't know whether we've optimised that but at least it's a thing which feels like it's working fairly well.

[43:31] WALKER: So you've been a remote CEO since '91 — and indeed much of the company is distributed. How do you think about the trade-offs involved in remote work? Because a lot of people stress the importance of physical proximity for fomenting the exchange of ideas — the proverbial water cooler conversation.

WOLFRAM: Yes, it's funny because people adapt to lots of different kinds of things in their lives, in the world and so on. I think that companies do the same thing — that is, our company has just adapted to the idea that it is distributed, and people get comfortable with brainstorming on Zoom or whatever... And that's happened for a long time. When I really knew that we'd turned the corner, years ago now, was when people were working in the same office and you realise that they're actually talking to each other on their computer even though they're just down the hall. And why are they doing that? Well, because it's more convenient, because they can share the screen more easily, it's an easier way to take notes and so on, it's less distracting, et cetera, et cetera. People get used to these kinds of things.

Now, the dynamics of in-person versus kind of remote... There are certain kinds of conversations I do find it more useful to have in person: they're mostly personal conversations, really.

When it's, "This is a set of ideas. They're kind of impersonal. It's all about ideas," it's okay, it works pretty well in my experience, remotely. And by the way, it has the tremendous advantage for us that there are people distributed around the world in completely different kind of personal settings, cultural settings, et cetera, and I notice that there are times when I think we have a better view of things because we do have that kind of diversity of environment, for the people. If everybody was kind of like, "We're all living in the same town, we're all kind of seeing the same things," it brings less ideas to the table. So I think that's been a really worthwhile thing.

But sometimes when it comes to understanding people, which is something that occasionally is really valuable to do, the in person thing is often useful in that regard. I mean, it's like what can you get from email versus what can you get from a phone call versus what can you get from actually seeing people in person? Now, every year we have an experiment, I suppose, in this: we have a summer school for grown ups and we have a summer research program for high school students and so on, which is in fact just starting tomorrow for this year. And that's an in person thing for altogether about 150 people or so. And it's an interesting dynamic, it's a different dynamic. I think that it's a great way to get to know people. In the three weeks of the summer school, one can get to know people much better for the fact that one is actually running into them in person.

It would take longer to get the same level of "Oh, I really understand something about this person" if it was done kind of remotely in some more attenuated way. But if I look at all the things we've invented at the company and have they been invented in in-person conversations even when those happen? Not really. What is difficult is getting to the point where you really can have a brainstorming type conversation with people. And for some people that's more convenient when they're in person, and they're not used to it when it's a remote thing. But people get used to that. And for me, for our company, I suppose, there are certainly people where I find it easier to kind of expose ideas talking to them than other people.

And there's sort of an environment, a cultural environment, one sets up where it's kind of easier to expose ideas than otherwise. I mean, one of the dynamics for us in recent times for our software design activities, we live stream a bunch of these things and that's a whole other interesting dynamic that's worked out really well. The process, which I've always found very interesting, of kind of inventing software design and so on, it gives it a certain extra gravitas or something that we're recording this and people can watch it. It's kind of like the process means something as well as the end result. And actually I think that's helped us have a better process and a better feeling that we're accountable for the process as well as for the end result. It's something that I found quite helpful.

WALKER: The other, I think, valuable aspect of those livestreams — which I'll link to, there's an amazing library of them; they're incredible; and a lot of your other meetings as well around the physics project and whatnot — from the standpoint of the general public, those kind of recordings facilitate tacit knowledge communication.

WOLFRAM: Look, I think we're the only group that has either the chutzpah or the stupidity.

WALKER: To work in public.

WOLFRAM: Yeah, right. But I have to say, whether it's the humans or the AIs that pick up on this and learn how to think about things, I think this process of seeing thinking happen is very useful for people. I know that at our summer school I tend to do a live experiment for people. I actually just figured out this morning what my live experiment will be this time. And what's useful about that is people get to see we don't know what's going to happen, we're puttering away and then things usually go horribly wrong and then usually eventually it comes together in some way. The fact that you can see that happen and you can see the missteps that get made and so on, and you can kind of get a sense of sort of an intuition for how the rhythm of such a project works, that's an important thing.

Too much of, for example, education ends up just being, "Here's the way it is," not, "Well, you too can think about it." I mean, I was mentioning my early — what I was describing as an educational hack — of you can go and explore things that haven't been explored before, this idea that you can actually be in the process of thinking about things, not just, "Here's the answer, let me tell it to you," type thing.

WALKER: Okay, so of the four large projects you've done in your life — Mathematica, A New Kind of Science, Wolfram Alpha and The Physics Project — I'm going to assume that A New Kind of Science was the most difficult, correct?

WOLFRAM: It was the most personal. I had some research assistants and things, but it was really a very individual project. And most of these other ones, there are teams involved, there are other people involved. It's one of these things where the question for a project is always, "If I don't do something today, does that mean nothing happens on this project today?" And by the time there's hundreds of people working on some software development thing, even if I do nothing today, the machine is going to keep moving forward.

[51:44] WALKER: I have a bunch of specific questions about A New Kind of Science. Firstly, I want to talk about the book from the perspective of treating it as a project. Secondly, its impact. And then, thirdly, the content of its claims. But let's start with it as a project, because I think it's one of the most ambitious, inspiring intellectual projects I'm aware of.

Okay, a bunch of questions on this. So when you were standing on the precipice of the project in '91, did you have any idea it would take you more than ten years to complete?

WOLFRAM: No. I wouldn't have done it if I did.

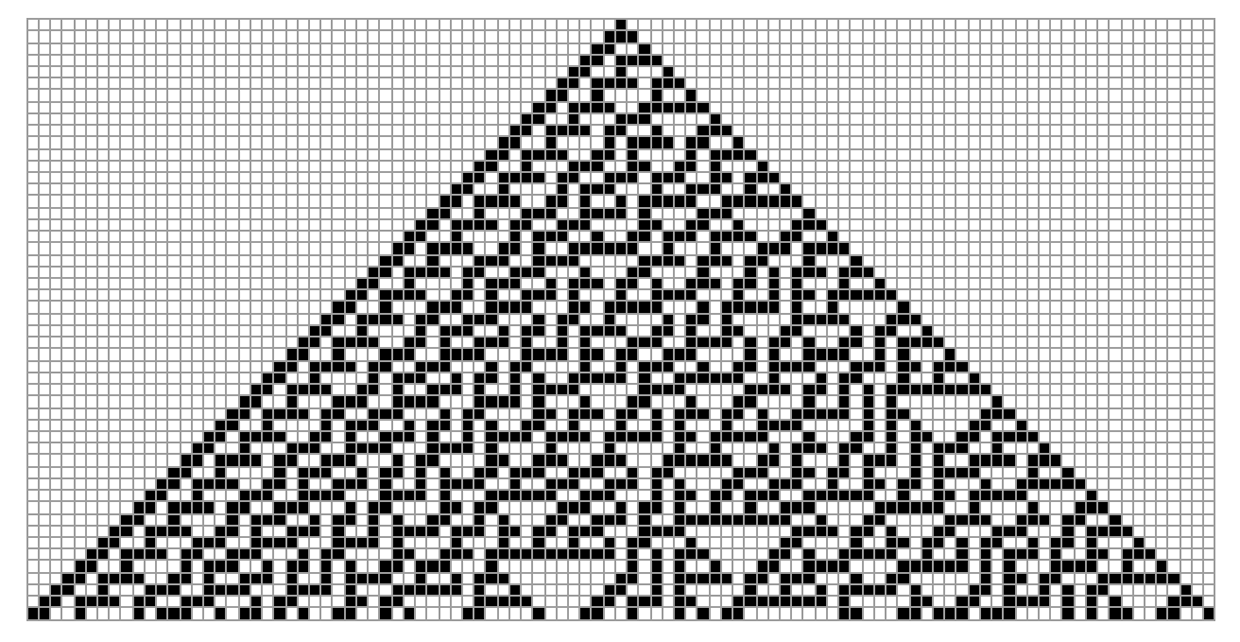

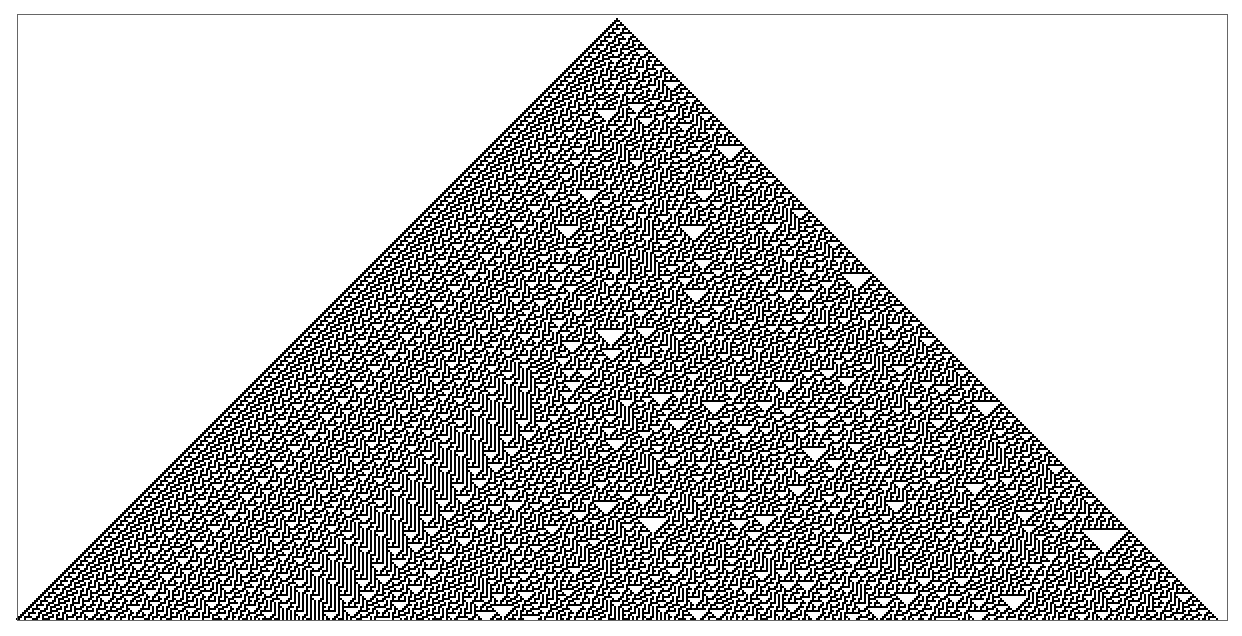

My original concept of the project — and this is often how these projects work — is I had worked on simple programs, cellular automata and things, in the 1980s. I'd been pretty pleased with how that had worked out. I kind of thought there was what I would now describe as a new kind of science to build that really focused on complexity as the thing to understand. And I tried to get that started in the mid-1980s, and I tried to do that not only as an intellectual matter but as an organisational matter as well.

It was kind of frustrating. It went really slowly. I was 26 years old or whatever; I didn't understand that the world moves more slowly than you can possibly imagine.

I went to my Plan B of: build my own environment, my own tools, and then dive in and do it myself.

I thought when I started A New Kind of Science I was mostly going to summarise what I had done in the 1980s in a well-packaged way. But I thought, "I'd better go and actually make sure I understand the foundations of this better. And there are some obvious questions to ask. Let me go ask them. Now that I have tools that let me ask these questions, I can go ask them."

First couple of years I was really studying programs other than cellular automata, what really happened with them, Turing machines, register machines, all these different kinds of things. I found it was quite quick work, actually. If the book had been just that exploration of what I would now call ruliology (the study of simple rules and what they do), then I would have been done by 1993.
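To make "simple rules and what they do" concrete, here is a minimal sketch of an elementary cellular automaton, one of the kinds of simple programs mentioned above. It is written in Python rather than Wolfram Language, and the function names, grid width, wraparound edges and choice of rule 30 are illustrative assumptions, not anything specified in the conversation.

```python
def step(cells, rule):
    """Apply one step of an elementary cellular automaton (edges wrap around)."""
    n = len(cells)
    out = []
    for i in range(n):
        left, centre, right = cells[(i - 1) % n], cells[i], cells[(i + 1) % n]
        neighbourhood = (left << 2) | (centre << 1) | right  # a value from 0 to 7
        out.append((rule >> neighbourhood) & 1)  # read that bit of the rule number
    return out


def run(rule, width=31, steps=15):
    """Evolve from a single black cell in the middle; return every row."""
    cells = [0] * width
    cells[width // 2] = 1
    rows = [cells]
    for _ in range(steps):
        cells = step(cells, rule)
        rows.append(cells)
    return rows


if __name__ == "__main__":
    # Print the pattern for rule 30 (an arbitrary choice for this sketch).
    for row in run(30):
        print("".join("#" if cell else "." for cell in row))
```

Changing the rule number (0 to 255) in `run` is the whole experiment: the same few lines of code produce very different behaviour depending on that one parameter.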

But what then happened was I was like, "Well, there's low-hanging fruit to be picked in how this applies to different areas." Maybe I started at the bottom branch of the tree, but I quickly found there's much more fruit going all the way up the tree, so to speak, and just discovered a lot more than I expected to discover. I felt this almost obligation to figure this stuff out within this context.

Now, I also knew perfectly well that producing one sort of high-impact thing was going to be much more economical with my life than writing 500 papers about lots of different small pieces. I knew that having a matrix for where to put the things I was discovering, having a single place to put them, was a lot more efficient. That was a conscious realisation: I'm not going to write endless papers which won't fit together and somebody will have to come back years later and say, "Oh, look — all these things fit together."

I also think that the process of writing the book, it's like: I want to understand the science, how do I know that I understand it? Well, I try and really write it in as minimal a way as possible. That was my internal mechanism for getting to the place where I wanted to get to intellectually.

WALKER: Correct me if I'm wrong, but you set out the table of contents at the beginning of the project.

WOLFRAM: I did.

WALKER: Did you worry that would somehow make you too intellectually rigid?

WOLFRAM: I didn't think about that, because I thought this is an 18-month project and I know what's going to be in it.

WALKER: And it was the same table by the end, right?

WOLFRAM: Pretty much. Pretty much. I'm sure I have all the data. I just wrote this thing recently; because it was the 20th anniversary, I wrote this, as it turned out, very long and elaborate piece about the making of A New Kind of Science.

The thing that really happened was the table didn't broaden, it just deepened. So in a sense, what I was covering was the main areas of intellectual formalisation, whether it's in science, physics, biology, whatever else, mathematics. The table of contents didn't really expand.

Now, something I left out of the book was the technological implications of all of this, and I made the conscious decision I'm not going to do that as part of this project: I don't know how that's going to work out, but that's a separated piece. And I certainly did start thinking about that while I was doing the science of the project and then said I'm not going to do that.

One of the things, for me at least, is that I have many ideas. And one of the things I've learnt is that one of the very frustrating things that can happen is you have ideas but you can do nothing with them. Because it's like, yes, it's a good idea, but to implement that idea, you need this whole structure in the world that I don't happen to have. And so I tend to, as a self-preservation move, try and constrain the ideas that I think about to be ones in which I have some kind of matrix for delivering those ideas. And A New Kind of Science was a great matrix for presenting certain kinds of ideas.

So for example, right now, if I decide one day I really want to study some really cool aspect of register machines, for example, well, I could do that, it might be fun, but I really don't have a great matrix into which to put those results. So I'll tend not to do that right now. I'll tend to do things for which I have some sort of delivery mechanism, because otherwise it's just frustrating. You just build up these things that are sort of free-floating disconnected "oh, I can't even remember I did that"-type things.

One of the things very nice about the A New Kind of Science book is that I refer to it all the time and it's like, "Yeah, I think I understood that once — let me go look at the note in NKS."

WALKER: Like on a daily basis?

WOLFRAM: These days, yes.

I mean, it depends a little bit whether I'm doing science or technology. But yes, all the time. A thing for me that's important about the things I write is that I refer to them.

Particularly in recent times now, all the code and all the things I write, any picture, is click-to-copy, so it has click-to-copy code. So there's a picture and it has all these things going on and you click it and you get some Wolfram Language code, you paste it into a notebook and it runs and it makes that same picture. That's a very powerful thing. Like at our summer school, that's a thing people are using all the time to be able to build on stuff one's already done. And it's been actually a long running project to get click-to-copy code for everything in A New Kind of Science. It's slowly getting done.

But I refer to it all the time, because it's very convenient to have a condensation of a large chunk of things one's thought about. Between that and Wolfram Language, that's a pretty good chunk of things I know about (and now stuff for the Physics Project). But having that be organised is really nice. If it was scattered across a zillion academic papers, I would always be like, "I don't know where I talked about that".

[59:50] WALKER: How good are you generally at predicting how long projects will take and how many resources they'll require?

WOLFRAM: I don't know. Locally, pretty decent. But what tends to happen is it's a question of just: how well do you want to do this project?

It's funny, at our company, there's a chap who is now actually still with the company but sort of semi-retired, who joined the company very early in its history, and had had an experience of doing project management actually for building like billion dollar freeways. So it's an area where you better not get it wrong.

Anyway, he came into our company and he says, "I'm going to be able to tell you how long it's going to take to do every one of these projects that you think you're going to do." Okay? So I said, "I don't believe you." He said, "It'll take me six months, but I'll really be able to predict this pretty well." And he was right. He can predict it.

WALKER: Really?

WOLFRAM: Yes.

WALKER: Can you share what he does?

WOLFRAM: I'm not sure. I think it's a bunch of judgement.

But here's the terrible thing. Then he said, "This is going to take us two years," he'd say about something. "Let's tell the team it's going to take two years." Okay, if you tell the team it's going to take two years, it doesn't take two years anymore; it takes longer.

And so we had this big argument about: we know how long these projects are going to take, should we tell people how long they're going to take?

And the answer was, in the end, no.

WALKER: Interesting.

WOLFRAM: It's not useful. It is sometimes useful from a management point of view to know. But even sometimes from a management point of view, for the kinds of things we're doing, which are one-of-a-kind projects that have never been done before, it's often you don't really want to know, because the optimism, the vision — that's all necessary.

I suppose I've been wrong in both directions. Like the Physics Project, I had no idea that would happen as quickly as it did. And that was something where I thought we'd be picking away at little pieces for a decade or two. And it turned out we got a whole collection of breakthroughs very quickly.

And I think I have more of a feeling now for the arc of intellectual history, of how long things take to kind of get absorbed in the world — and it's just shockingly long. I mean, it's depressingly long. Human life is finite. I perfectly well know that lots of things I've invented won't be absorbed until long after I'm no longer around. The timescales are 100 years, more.

It's kind of satisfying to say, "I can see what the future is like." That's cool. It's also a little frustrating because to me, one of the things, particularly as I've gotten older, that I really get a kick out of is you invent ideas, you invent things. And it's just really nice to see people get satisfaction, fulfilment, excitement out of absorbing those ideas. I mean, the ego thing of, "Oh yeah, they got my idea" for me is less important than, "It's so cool to see these people get excited about this." It's kind of like you gave them a gift, and they enjoy it.

That's a thing where it makes it a pity that the fruition is going to come 100 years from now. And it will be just pleasant to be able to see a bunch of those things.

But one of the things I would say — in technology prediction it happens as well — is I think I have a really excellent record of predicting what will happen but not when it will happen. And a classic example (my wife reminds me about this example from time to time) is back in the early '90s modifying an existing house, and we had this place we'd really like to put a television, but it's only four inches deep. And I'm like, "Don't worry, there are going to be flat screen televisions." This was beginning of the '90s, right?

Well, of course there were flat screen televisions in the end, but it took another 15 years. Why was I wrong? Well, I had seen flat screen televisions. I knew the technology of them.

What was wrong was something very subtle, which was the yield. When you make a semiconductor device, it's like you're making all these transistors and some of them don't work properly. And when you're doing that in a memory chip or something, you can route around that and it's all very straightforward. When you're doing that on a great big television, if there are some pixels that don't work, you really notice that. And so what happened was, yes, you could make these things and one in a thousand would have all those pixels working properly. But that's not good enough to have a commercially viable flat screen television. So it took a long time for those yields to get better to the point where you could have consumer flat screen televisions. That was really hard to predict.

Perhaps if I'd really known semiconductors better and really thought through "it's really going to matter if there's one defect here" and so on, I could have figured that out. But it was much easier to say, "This is how it's going to end up," than to say when it's going to happen.
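The yield argument can be made roughly quantitative. The sketch below assumes that pixel defects are independent and rare, so the fraction of flawless panels is about exp(-mean defects per panel). The panel size and the 90% target yield are hypothetical numbers chosen for illustration; only the "one in a thousand" figure comes from the conversation.

```python
import math


def expected_defects(panel_yield: float) -> float:
    """Mean defects per panel implied by a yield, assuming independent rare defects."""
    return -math.log(panel_yield)


def per_pixel_defect_rate(panel_yield: float, pixels: int) -> float:
    """Per-pixel failure probability if every pixel fails independently."""
    return 1.0 - panel_yield ** (1.0 / pixels)


# Hypothetical panel: 640 x 480 pixels with three sub-pixels each.
PIXELS = 640 * 480 * 3

early = expected_defects(1 / 1000)  # "one in a thousand" panels fully working
target = expected_defects(0.9)      # hypothetical commercially viable yield
improvement = early / target        # factor by which the defect rate must fall

if __name__ == "__main__":
    print(f"mean defects per panel at 0.1% yield: {early:.2f}")
    print(f"per-pixel defect rate on this panel:  {per_pixel_defect_rate(1 / 1000, PIXELS):.2e}")
    print(f"defect rate must fall by a factor of: {improvement:.0f}")
```

Under these assumptions a one-in-a-thousand yield corresponds to only about seven bad pixels per panel on average, yet reaching a 90% yield would need the defect rate to fall by roughly a factor of 65. That gap is one way to see why working prototypes existed long before consumer products did.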

Like, I'm sure one day there will be general-purpose robotics that works well and that will be the ChatGPT moment for many kinds of mechanical tasks. When will that happen? I have no idea. That it will happen I am quite sure of. You could say things about molecular computing — I'm sure they'll happen. Things about sort of medicine and life sciences — I'm sure they'll happen. I don't know when.

It's really hard to predict when. Sometimes some things, like the Physics Project, for example, good question: when would that happen? I had thought for a while that there were ideas that should converge into what became our Physics Project. The fact that happened in 2020, not in 2150 or something, is not obvious. As I look at the Physics Project, one of the things that is a very strange feeling for me is I look at all the things that could have been different that would have had that project never happen. And that project was a very remarkable collection of almost coincidences that aligned a lot of things to make that project happen. Now, the fact that that project ended up being easier than I expected was also completely unpredictable, to me at least.

But I think this point that you can't know when it will happen... It's like, "Okay, we're going to get a fundamental theory of physics." Descartes thought we were going to get a fundamental theory of physics within 100 years of his time. Turns out he was wrong.

But to know that it will happen is a different thing from knowing when it will happen, and sometimes when it will happen depends on the personal circumstances of particular individuals. For example, things like our company happened to have done really well in the time heading into the Physics Project, so I felt I could take more time to do that — and lots of silly details like that. That makes it even harder to predict when what things will happen.

And in terms of, you know, how long a project will take, there are projects where it's kind of like you know you can do it. If you say, "Write an exposition of this or that thing," like, I know I wrote an exposition of ChatGPT, I knew roughly how long that would take to do. It's an "I know I can do it" type thing.

There are other things where if you say, "Can you figure out something that's never been figured out before?" No, I don't know how long it's going to take.

[1:08:35] WALKER: Do you feel like you've gotten better at project management over time? I feel like it's one of the big underrated skillsets in the world.

WOLFRAM: Yeah. I mean, what does it take to manage a project? I mean, there's managing a project that's just you, and there's managing a project that has lots of other people in it as well.

The first step is, can you assemble the right team to do the project? And one of the things I always think is that a role of management is you've got projects, you've got people — there are these complicated puzzle pieces. How do you fit them together? And do you have your arms well enough around the project to know what it's going to take? And do you understand the people well enough to know how will this person perform doing these things for this project? So that's the first step. And yes, I think I've gotten significantly better at that.

Because it's really straightforward: I just have more experience and I just know, "I've seen a person like that before. I've seen a project like that before." I have this lexicon. It's helpful to me that there are a lot of people at our company who've worked with me for a very long time, and so something will come up and they'll say, "Oh, yeah, remember this situation in 1995 where we had something like this happen?" And it's like everybody has this kind of common view of "Well, this plays out this way." It's always interesting: we have a lot of bright people who come into our company, and people know there's a certain pattern of the young eager folk who come in, and some do fantastically and some blow themselves up in some way or another. And there's a certain pattern to that.

And the fact that there's a group of people who've all seen this is helpful, and it's often very hard to predict the details of what will happen. But yeah, I've definitely gotten better at that.

At our company, we have a pretty serious project management operation — actually started by this same guy that I mentioned who was estimating times for projects. He kind of built this kind of structure for doing project management. And there's a certain set of expectations for project managers. I think one of the things that's important is project managers have to understand their project. They don't have to be able to do every technical detail, but they have to understand the functional structure of the project. And if they don't, it's not going to work. And they have to be able to fill in the things which the people in the trenches, so to speak, they don't see far enough away to be able to notice, "Oh, this piece has to fit together with this other piece."

The thing you always notice in projects — I've done a lot of big projects and a lot of often quite intense projects where like "we've got to deliver this by this time" — and one of the things I always notice is that you'll have a thing where people will be great at doing their particular silo. But the role of the overall manager ends up being: "This silo is great, that silo is great, but who's got the stuff in the middle?" And both of them say, "We're doing our job!" You have to really push hard often to get them to do the stuff that's in the middle.

A thing that really helps me in my efforts at management is I rarely manage anything where I couldn't do it myself if I really wanted to. I do not envy people who manage things which they couldn't do themselves and people who are, for example, non-technical CEOs of tech companies. That's a tough business. Because for me, if I'm in some meeting and people are saying, "Oh, it's impossible. X is impossible." It's like, "Explain it to me." People at my company know me pretty well by this point, and sometimes newer people will try and explain it to me in sort of very baby terms, and it's like, "No, just tell me the actual story. And if I don't understand what some word means, I'll ask you what it means, more or less." And then it gets very technical very quickly.

It's very nice, actually, because I used to think that me diving into sort of these very deep technical details would be dispiriting to the teams that were working on this. Because, like, "Look, the CEO could just jump in and parachute in and just do our job." I thought that would be bad for people to feel that way. Actually, quite the opposite. It's: "Hey, it's cool, the CEO actually understands what we do and has some appreciation for what we do. And by the way, okay, we didn't manage to figure this out, and he did manage to figure this out." It's like, "Well, we learnt something from that," and it's actually a good dynamic. It's not what I expected.

It is interesting to me that, oh I don't know, things like debugging complex software problems, I am always a little bit disappointed that I am better at that than one might think I would be. But it is two things: it's experience and it's keeping the thinking apparatus engaged (and it's also perhaps knowing some tools). It's a very common thing: some problem in some server thing and this, that, and the other. First of all, it's experience: "Did you look at this and this?" Maybe yes, maybe no. It's like, "Well, we can't tell what's going on. There's 100,000 log messages that are coming out." It's like, "Okay, did you write a program to analyse those log messages?" "Well, no, we looked at log messages." "Well, no," you sit down, you write a little piece of Wolfram Language code: "Hey, I'm going to do it right here." And then, "Oh, well, now we can look at the 100,000 messages and we realise there are five of them that tell us what's going on. But we'd never have noticed that if we were just doing it by hand." You end up making use of a lot of stuff from other areas to apply to this.
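The "write a program to analyse those log messages" step might look something like the following. This is a sketch in Python rather than the Wolfram Language mentioned above, and the log format, function names and threshold are all hypothetical. The idea is to collapse each message to a template by masking the parts that vary, count the templates, and surface the rare ones.

```python
import re
from collections import Counter


def template(message: str) -> str:
    """Mask variable parts (hex ids, numbers) so similar messages group together."""
    message = re.sub(r"0x[0-9a-fA-F]+", "<HEX>", message)
    return re.sub(r"\d+", "<N>", message)


def rare_messages(lines, max_count=5):
    """Return (template, count) pairs seen at most max_count times, rarest first."""
    counts = Counter(template(line.strip()) for line in lines if line.strip())
    rare = [(t, c) for t, c in counts.items() if c <= max_count]
    return sorted(rare, key=lambda pair: (pair[1], pair[0]))


# Synthetic stand-in for a large log: mostly routine lines plus a few odd ones.
sample_log = (
    [f"INFO request {i} served in {i % 40} ms" for i in range(100_000)]
    + ["ERROR worker 7 lost connection to db-2"] * 3
    + ["WARN disk 91 percent full on node 4"] * 2
)

if __name__ == "__main__":
    for text, count in rare_messages(sample_log):
        print(count, text)
```

On the synthetic log, the 100,000 routine lines collapse into a single template and only the two unusual message types are reported, which is the "five of them that tell us what's going on" effect in miniature.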

But this method of management where you do understand at some level the things that are going on is — again, that relates also to things like company size and so on — can you be at the point where that's going on?

And I know that for our company, there have been areas of the company which I for years never really understood, like our transaction processing systems. I never paid attention to those and they were kind of crummy, actually. And then finally, about five years ago, I got fed up because things were just too crazy. And I said, "We're going to build our own ERP transaction processing system in our own language. We're just going to build it from scratch." Which we've done. And it's a wonderful thing. We've learnt a lot from doing that. And we've managed to build something that's very good for us. It'll probably spin off as a separate company selling that to other people, too. But I was shocked at the things I didn't understand how crummy they actually were.

It's a lesson. Part of the dynamic that happens in companies is things the CEO doesn't care about, people don't put as much effort into. And so I suppose it's the "inspecting the troops" theory of things, even though that function...it isn't really that important that you check out the swords or something, but the fact that you bothered to do it is important. That's a dynamic that I certainly see. And that's a reason why it's pretty nice to be able to parachute into the details of projects and so on, because it very much communicates that, yes, you care about this stuff even though you're not spending all your time doing it. It's not like you say, "Oh, yeah, they're those guys doing DevOps or something. I don't care about DevOps."

WALKER: I've been coming around to this idea that micromanagement is underrated. But back to A New Kind of Science and the process of writing it. So you famously worked in solitude for ten years. Did that reclusive period run against your nature, or are you comfortable being a lone Wolf(ram)?

WOLFRAM: Oh, I'm a gregarious person. I like people. I like learning things from people, but I'm probably not a big small-talk, just-hang-out-with-people kind of person. To be fair, if you look at the ages of my children, three of them were born during the time that I was working on A New Kind of Science. So I can't claim I was a...

WALKER: Monk.

WOLFRAM: Yeah, right. And I was also running a company. So again, I wasn't completely isolated in that respect. But in terms of the process of doing the intellectual work, it was not a collective process. I mean, I had some research assistants I delegated some particular things to, but it was very much of a solo activity.

Now, in the early time of working on the project I did occasionally talk to people about it, and it was a disaster, because what happened was people would say, "Oh yeah, that thing is interesting. What about this question? What about that question?"

And then I'd think, "Well, I should think about that, I guess." And then I'd waste several days thinking about such and such a question and I'd say, "I don't really need that."

WALKER: Which they may have even suggested kind of flippantly in the first place.

WOLFRAM: Perhaps, but even if they have expertise and it was well-intentioned, in order to get a project of that magnitude done, you have to just say, "I've got a plan; I'm going to execute my plan." The distraction of other people's input and so on, I really didn't want it. I learnt actively early on in the project, if I have that input it will not get done anytime soon. And so it was much better to just close things off.

And there are several points. I mean, first of all, the act of writing things, and being honest in what one's writing, is for me a very strong driver of: do you know what you're talking about? For many people it's like, "Well, let me chat with other people," and sometimes I find that useful for myself — to just chat with other people to know that I know what I'm talking about.

I mean, in the last few years I've been doing a lot of live-streaming and answering questions from people out in the world about things. That process has actually been quite helpful to me: I set up the camera, I'm going to be yakking for the next hour and answering a bunch of questions, and it gets me to think about a bunch of things. And this process of self-explanation, I find to be at least as valuable, if not more so, than the actual interaction back and forth with people. So that was one dynamic.

Another dynamic was I'm writing code. The code doesn't lie. It does what it does. And for me it's like, "Do I understand this? What does it actually do?" It's not like I need somebody to tell me, "Oh, that's wrong." I'm finding that out for myself because the code doesn't work, or whatever else.

So it didn't need some of the things where people think, "Oh, the socialisation will be useful." It didn't need that, and it was actively a negative because it was a distraction from staying on target.

[1:21:33] WALKER: Let me put an idea to you. In the general notes in the book you write about how it's crucial to be able to try out new ideas and experiment quickly. So with this idea of the importance of speed in science in mind, could you have benefited from a close collaborator in the Hardy-and-Ramanujan, Watson-and-Crick sense? I guess I have a hypothesis that pairs in science can accelerate the progress of a field in a way that a solo researcher can't and a group of three or more can't, because the pair can bounce ideas off each other.

WOLFRAM: Possibly. I mean, I don't know.

WALKER: I guess the trick is finding a partner.

WOLFRAM: That's right. I mean, in the Physics Project I had a couple of people (Jonathan Gorard, Max Piskunov) who worked on the early part of that project. Particularly Jonathan's been a good person who's carried forward a lot of things. I think the fact that the project got done as efficiently as it did certainly was greatly helped by those guys being around.

It's probably a terrible statement about myself, you know: I haven't had that many successful collaborations in my life. I mean, I've been happily married for 30 years or something — that's I suppose one successful kind of thing like that. Although I think my wife would say — I would say — "We never collaborate on actual projects." It's like she wants to build a house, go build the house, I'm not going to be involved.

But in any case, it's a thing where when I was younger, when I was a late teenager, whatever, doing physics and so on, I did collaborate with people and I had some great collaborators.

But I would say that a lot of the dynamic was more social and more motivational for me than it was necessary — I mean they certainly contributed plenty of things — from a pure technical execution point of view. I don't disagree that if you find the right collaborator at the right time, it's cool. And sometimes there are times when it happens for a while and then it doesn't happen anymore.

I would say that the ones you mentioned — I mean Watson and Crick, I happen to know both of those people not terribly well, but I have a little bit more personal view of that. But if you take Hardy and Ramanujan, I think it wouldn't be fair to say that was so much of a collaboration. I mean I think Ramanujan was an experimental mathematician who Hardy never really understood, and I think that was more of a Hardy as distribution channel and as kind of socialiser to the world, so to speak, and Ramanujan as kind of a person just pulling mathematics out of the experimental mind.

WALKER: Yeah interesting. I got that impression when I read your essay on Ramanujan.

WOLFRAM: Right. As I say, it's great if you can have two people moving things forward rather than one. On the other hand, finding that second person where there's a perfect fit is very challenging, and although I have known and worked with many terrific people, the number of times in my life where that dynamic has really developed is very small. For the Physics Project I was lucky that Jonathan read the NKS book when he was in junior high school or something. So it's somebody where there's an intellectual alignment that was not of my making. It was kind of a thing that had independently happened.

But when you're building something new and it's like nobody's done something like that before and can you find the other person who also believes that thing is worth doing — that's a difficult thing. I think it's great if it works.

In business, for example, in my company right now, I've been the CEO from the beginning. I've never really had a business partner, to my detriment. I've been lucky enough to have lots of great people I've worked with, but I wouldn't say I've ever really had... Maybe now I have some hope of having aligned that, but we'll see. But being able to say, "Look, I want to do the intellectual stuff, somebody else be the business partner" type thing. And perhaps I have been both lucky and unlucky that I am competent enough at running a business that it isn't an absolute disaster not to have somebody else in there doing it. But on the other hand, I consider myself pretty good on the R&D innovation side.

I always rate myself as kind of mediocre on the running a business side. But the truth is, probably from the outside I'm much better at that than I think I am. Partly because for me most of the things that have to be done are just pure common sense. It's just: keep the thinking apparatus engaged, it'll be okay. And I know because I've advised a lot of people who have lots of tech startups and so on, I know that my "it's just common sense" thing isn't really quite right. I've been super useful as an advisor to lots of companies where people say, "Wow, you can figure all this stuff out. We couldn't figure out what to do and you can figure it out." But to me internally it's like, "Look, that stuff is pretty obvious."

Whereas a lot of things I do in science and so on, I don't think they're obvious. I think they require intellectual heavy lifting to do them. Does that mean that I'm saying that business is easier than science? I don't think it necessarily is. It's just that I don't take seriously whatever skills I might have or thinking capability I might have on the business side.

WALKER: Do you have any unique comments on the Watson and Crick partnership?

WOLFRAM: Don't think so, don't think so.

[1:29:00] WALKER: Okay. So it strikes me that A New Kind of Science as a project would almost be inconceivable to pull off within the context of academia, which is kind of a sad thought. What accounts for the incrementalism in academia?

WOLFRAM: It's big. Academia is big. In any field, when it's small, it's not as incremental. It's when it gets big, it gets necessarily institutionalised. By the time you have 20,000 people in a field, it's got to have structure. It's got to be, well, which people do you fund? Which people go in the departments? Who sets the curriculum? All this kind of thing. When it's an emerging field and there's only five people working on it, you don't need that kind of structure. And indeed, those are the times when you see the fastest progress — when some new thing emerges, it's a small number of people, it's quite entrepreneurial, some of what gets done is probably nonsense, but some of it is great and not incremental.

I think academia as a whole, the fact that it is so big is the thing that holds it back and forces it to have this really conservative — they would hate to use that term in the context of academia — but it is; it's a conservative view of what makes sense to do.

And all these different fields, they develop their value systems. Their value systems get deeply locked in, because it's the funding cycle, the publication cycle, all this kind of thing. That's how that works.

I see people who want to be more entrepreneurial. Can you be intellectually entrepreneurial and be an academic? The answer is there's only a certain amount of entrepreneurism that works. If you want to be more entrepreneurial, if you're lucky enough to be...

In a sense, this happened to me. I mean, I got to the point where I was a respectable academic, in a good kind of position, and I got to that point when I was pretty young, and so it was like, "Okay, now I can do whatever the heck I want, and now I can do things that aren't particularly incremental." Again, I was lucky because I worked in particle physics which was having its golden age in the late 1970s. And that was a time when, in a sense, there was low-hanging fruit to be picked. Incremental progress was big because the field was in this very active phase. Once one had made some reasonable incremental progress, people could say, "Oh yeah, that person knows what they're doing and so they can be a physics professor or whatever," and then one can go off and do other kinds of things.

But it's rare that people end up with that kind of platform. And it's very common that they've gone through this tunnel for 15 years or 20 years, and by that point they can't really escape from that very narrow thing that they were doing.

But I think the number one thing is academia is big, and that means it has structure — and has structure that holds back the spiky stuff that gets to be really innovative. And I think it's almost a case of "be careful what you wish for". As I think about some fields of science that I've been interested in moving forward, like this area of ruliology and so on, I think, what's that going to look like? I'm going to build a structure for doing ruliology and then the really cool stuff, it will have a definite direction — and that's a particular area which has a nice feature as some other areas have had, where just doing more stuff is useful.

So like 130 years ago or something, people doing chemistry: "Let's go study all these different chemical compounds." It just was useful to build this giant encyclopaedia of what was true about all those things.

So similarly with ruliology. There are times when incrementalism in science is useful because you need a bunch of incrementalism to build this encyclopaedia that you need to be able to make the next big conceptual leap. And I think that's not a bad thing.

The other point is that people only understand things at a certain rate. If there were major new paradigms in science being invented every year, people would find that utterly disorienting, nobody would keep track of it. It would just be a mess. In order to socialise ideas, it can't be too fast.

WALKER: Titration.

WOLFRAM: Yeah, right.

WALKER: Titrate the paradigms.

WOLFRAM: Yes, yes.

[1:34:02] WALKER: It raises the question of where in the world truly original research should be done. If it's not in universities, then, I mean, what have you got left? Corporate monopolies, or more exotic research institutions like the Institute for Advanced Study or All Souls at Oxford. Do we need new social and economic structures to support original research? Have you thought about this? Do you have any suggestions?

WOLFRAM: Yes, I have thought about this. I don't have a great answer.

WALKER: Interesting.

WOLFRAM: The Institute for Advanced Study, where I worked at one point, is a good example of a bad example in some ways.

I worked there at a time when Oppenheimer had been the director a decade and a half earlier. He was very much a people person; he picked a lot of very interesting people. And by the time I was there, many of his best bets had departed, leaving the people he had bet on who weren't such good bets, as it turned out.

And then there's this very strange dynamic of somebody who was in their late twenties, and it's like, "Okay, now you're set for life. Just think." Turns out that doesn't work out that well for most people. So that isn't a great solution. You might think it would be a really good solution, let's just anoint these various people — "You go think about whatever you want to think about". That turns out not to work very well. Turns out people in this disembodied "just think"-type setting, it's just a hard human situation to be in.