David Deutsch & Steven Pinker (First Ever Public Dialogue) — AGI, P(Doom), and The Enemies of Progress (#153)

At a time when the Enlightenment is under attack from without and within, I bring together two of the most thoughtful defenders of progress and reason, for their first ever public dialogue.

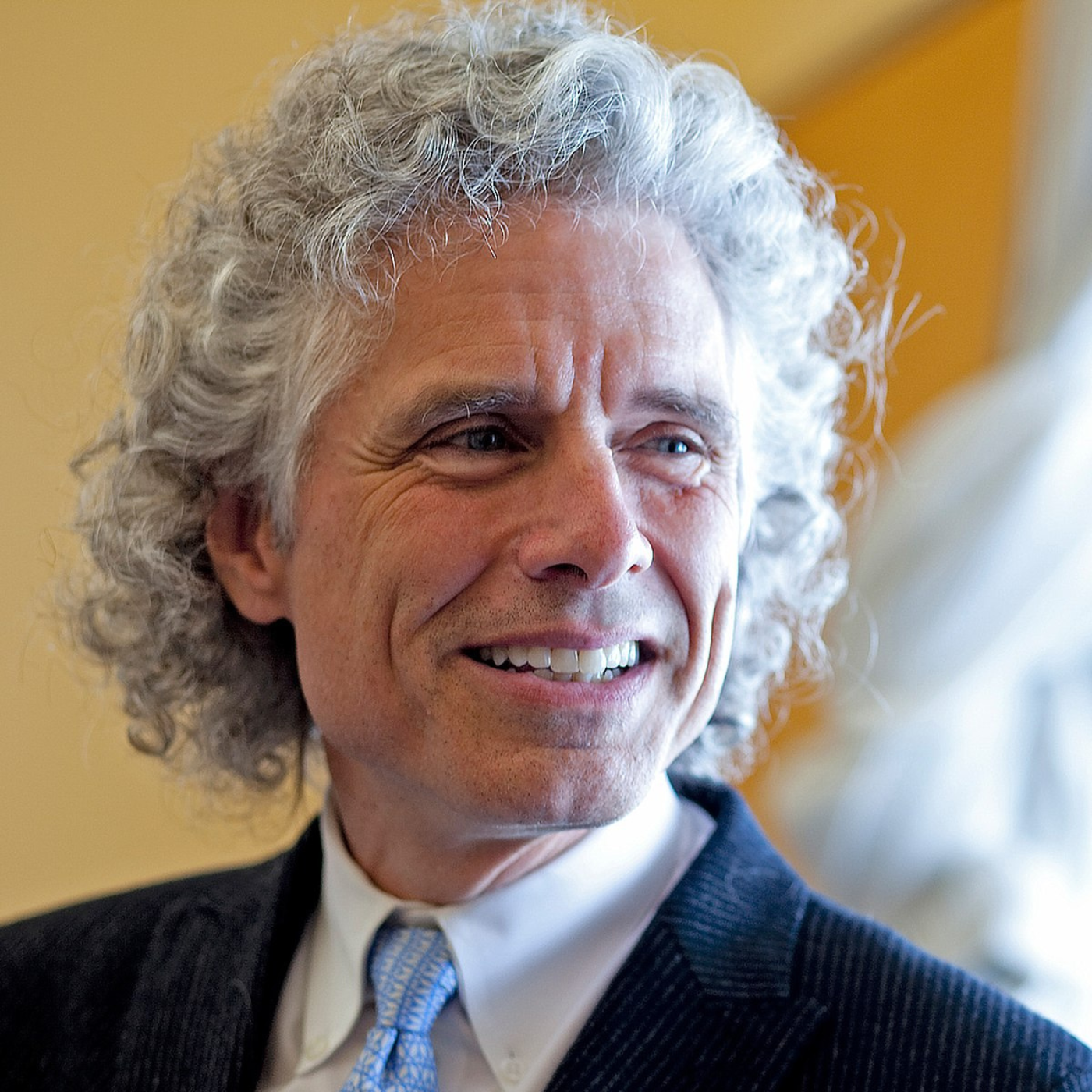

Steven Pinker is the Johnstone Family Professor of Psychology at Harvard University. I think of him as providing the strongest empirical defence of the Enlightenment (as seen in his book Enlightenment Now).

David Deutsch is a British physicist at the University of Oxford, and the father of quantum computing. I think of him as having produced the most compelling first-principles defence of the Enlightenment (as seen in his book The Beginning of Infinity).

Transcript

JOSEPH WALKER: Today I have the great pleasure of hosting two optimists, two of my favourite public intellectuals, and two former guests of the podcast. I'll welcome each of them individually. Steven Pinker, welcome back to the show.

STEVEN PINKER: Thank you.

WALKER: And David Deutsch, welcome back to the show.

DAVID DEUTSCH: Thank you. Thanks for having me.

WALKER: So today I'd like to discuss artificial intelligence, progress, differential technological development, universal explainers, heritability, and a bunch of other interesting topics. But first, before all of that, I'd like to begin by having each of you share something you found useful or important in the other's work. So, Steve, I'll start with you. What's something you found useful or important in David's work?

PINKER: Foremost would be a rational basis for an expectation of progress. That is, not optimism in the sense of seeing the glass as half full or wearing rose-coloured glasses, because there's no a priori reason to think that your personality, your temperament, which side of the bed you got out of that morning, should have any bearing on what happens in the world.

But David has explicated a reason why progress is a reasonable expectation, in quotes that I have used many times (I hope I've always attributed them), including the one I use as the epigraph of my book Enlightenment Now: that unless something violates the laws of nature, all problems are solvable given the right knowledge.

And I also often cite David's little three-line motto or credo: problems are inevitable, problems are solvable, solutions create new problems which must be solved in their turn.

WALKER: And David, what's something you’ve found useful or important in Steve's work?

DEUTSCH: Well, I think this is going to be true of all fans of Steven. He is one of the great champions of the Enlightenment in this era when the Enlightenment is under attack from multiple directions. And he is steadfast in defending it and opposing – I’m just trying to think, is it true “all”? – yeah, I think opposing all attacks on it. That's not to say that he's opposing everything that's false, but he's opposing every attack on the Enlightenment.

And he can do that better than almost anybody, I think. He does it with – I was going to say authority, but I'm opposed to authority – but he does it with cogency and persuasiveness.

WALKER: So let's talk about artificial intelligence. Steve, you've said that AGI is an incoherent concept. Could you briefly elaborate on what you mean by that?

PINKER: Yes, I think there's a tendency to confuse the intelligence that we want to duplicate in artificial intelligence with magic, with miracles, with the bringing about of anything that we can imagine in the theatre of our imaginations. Whereas intelligence is, in fact, a gadget: it's an algorithm that can solve certain problems in certain environments, and maybe not others in other environments.

Also, there's a tendency to import the idea of general intelligence from psychometrics, that is, IQ testing, something that presumably Einstein had more of than the man in the street, and say, well, if we only could purify that and build even more of it into a computer, we'll get a computer that's even smarter than Einstein.

That, I think, is also a mistake of reasoning. We should think of intelligence not as a miracle, not as some magic potent substance, but rather as an algorithm or set of algorithms. And therefore, there are some things any algorithm can do well, and others that it can't do so well, depending on the world that it finds itself in and the problems it's aimed at solving.

DEUTSCH: By the way, this probably doesn't make any difference or much difference, but computer people tend to talk about AI and AGI as being algorithms. But an algorithm mathematically is a very narrowly defined thing. An algorithm has got to be guaranteed to halt when it has finished computing the function that it is designed to compute.

Whereas thinking need not halt, and it also need not compute the thing it was intended to compute. So if you ask me to solve a particular unsolved problem in physics, I may go away and then come back after a year and say, I've solved it, or I may say I haven't solved it, or I may say it's insoluble, or there's an infinite number of things I could end up saying. And therefore, I wasn't really running an algorithm, I was running a computer program. I am a computer program.

But to assume that it has the attributes of an algorithm is already rather limiting, in some contexts.
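A minimal Python sketch of the distinction Deutsch draws, assuming the textbook sense of "algorithm" as a procedure guaranteed to halt with the answer. `gcd` (Euclid's method) is an algorithm in that strict sense. `search_goldbach_counterexample` is a program aimed at an open problem (Goldbach's conjecture, chosen here purely as an illustration) with no guarantee that it ever halts. The function names and the optional step budget are hypothetical, added only so the sketch can be run safely.

```python
def gcd(a: int, b: int) -> int:
    """An algorithm in the strict sense: Euclid's method always halts with the answer."""
    while b:
        a, b = b, a % b
    return a


def is_prime(n: int) -> bool:
    """Trial division; slow but adequate for a small illustration."""
    if n < 2:
        return False
    i = 2
    while i * i <= n:
        if n % i == 0:
            return False
        i += 1
    return True


def search_goldbach_counterexample(max_steps=None):
    """A program, not an algorithm for its goal: it looks for an even number > 2 that
    is not the sum of two primes. Nobody knows whether one exists, so with
    max_steps=None nothing guarantees that this function ever returns.
    The step budget is a hypothetical safety valve for running the sketch."""
    n, steps = 4, 0
    while max_steps is None or steps < max_steps:
        if not any(is_prime(p) and is_prime(n - p) for p in range(2, n // 2 + 1)):
            return n  # found a counterexample: the conjecture would be false
        n += 2
        steps += 1
    return None  # gave up: "I haven't solved it" is also a possible outcome
```

Under a budget, `search_goldbach_counterexample(max_steps=500)` returns `None`, which corresponds to "I haven't solved it", just one of the open-ended outcomes Deutsch lists, whereas `gcd(48, 18)` always returns `6`.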

PINKER: That is true, and I was meaning it in the sense of a mechanism or a computer program. You're right: not an algorithm in that sense, defined by that particular problem.

It could be an algorithm for something else other than solving the problem. It could be an algorithm for executing human thought the way human thought happens to run. But all I’m interested in is the mechanism, you're right.

DEUTSCH: Yes, so we’re agreed on that.

So, sorry, I maybe shouldn't have interrupted.

PINKER: No, no, that's a worthwhile clarification.

WALKER: So, David, according to you, AGI must be possible because it's implied by computational universality. Could you briefly elaborate on that?

DEUTSCH: Yeah, it rests on several levels, which I think aren't controversial, but some people think they're controversial. So we know there are such things as universal computers, or at least arbitrarily good approximations to universal computers. So the computer that I'm speaking to you on now is a very good approximation to the functionality of a universal Turing machine. The only way it differs is that it will eventually break down, it's only got a finite amount of memory – but for the purpose for which we are using it, we're not running into those limits. So it's behaving exactly the same as a universal Turing machine would.

And the universal Turing machine has the same range of classical functions as the universal quantum computer, which I proved has the same range of functions as any quantum computer, which means that it can perform whatever computation any physical object can possibly perform.

So that proves that there exists some program which will meet the criteria for being an AGI, or for being whatever you want that's less than an AGI. But the maximum it could possibly be is an AGI, because it can't possibly exceed the computational abilities of a universal Turing machine.

Sorry if I made rather heavy weather of that, but because I think it's so obvious, I have to fill in the gaps just in case one of the gaps is mysterious to somebody.
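The idea that one fixed machine can do whatever any other machine can, once it is given that machine's description as data, can be sketched in Python. This is an interpreter, not a formal universal Turing machine: like the computer Deutsch mentions, it has finite memory and a finite step budget. The transition-table format and the two example machines (`INCREMENT`, `INVERT`) are hypothetical choices for illustration.

```python
from collections import defaultdict

# Hypothetical machine format: (state, symbol) -> (symbol_to_write, move "L"/"R", next_state)
INCREMENT = {  # adds 1 to a binary number
    ("start", "0"): ("0", "R", "start"),
    ("start", "1"): ("1", "R", "start"),
    ("start", "_"): ("_", "L", "carry"),  # reached the end; walk back adding 1
    ("carry", "1"): ("0", "L", "carry"),  # 1 + carry = 0, keep carrying
    ("carry", "0"): ("1", "L", "halt"),
    ("carry", "_"): ("1", "L", "halt"),   # overflow: write a new leading 1
}

INVERT = {  # flips every bit
    ("start", "0"): ("1", "R", "start"),
    ("start", "1"): ("0", "R", "start"),
    ("start", "_"): ("_", "L", "halt"),
}


def run_turing_machine(transitions, tape, blank="_", max_steps=10_000):
    """One fixed interpreter that runs whatever machine description it is given."""
    cells = defaultdict(lambda: blank, enumerate(tape))  # unbounded tape, blank by default
    head, state, steps = 0, "start", 0
    while state != "halt":
        if steps >= max_steps:
            raise RuntimeError("step budget exhausted (the machine may never halt)")
        write, move, state = transitions[(state, cells[head])]
        cells[head] = write
        head += 1 if move == "R" else -1
        steps += 1
    lo, hi = min(cells), max(cells)
    return "".join(cells[i] for i in range(lo, hi + 1)).strip(blank)
```

For example, `run_turing_machine(INCREMENT, "1011")` gives `"1100"` and `run_turing_machine(INVERT, "1011")` gives `"0100"`. The machines are data: a new capability means writing a new table, not changing the interpreter, and writing that table is where the knowledge comes in.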

PINKER: Although in practice a universal Turing machine… if you then think about what people mean when they talk about AGI, which is something like a simulacrum of a human or way better – everything that a human does – in theory, I guess there could be a universal Turing… In fact, there is – not “there could be”; there is a universal Turing machine that could both converse in any language, and solve physics problems, and drive a car and change a baby. But if you think about what it would take for a universal Turing machine to be equipped to actually solve those problems, you see that our current engineering companies are not going to approach AGI by building a universal Turing machine, for many reasons.

DEUTSCH: Quite so.

PINKER: It’s theoretically possible given an arbitrary amount of time and computing power, but we've got to narrow it down from just universal computing.

DEUTSCH: Actually, I think the main thing it would lack is the thing you didn't mention, namely the knowledge.

When we say the universal Turing machine can perform any function, we really mean, if you expand that out in full, it can be programmed to perform any computation that any other computer can; it can be programmed to speak any language, and so on. But it doesn't come with that built in. It couldn't possibly come with anything more than an infinitesimal amount built in, no matter how big it was, no matter how much memory it had and so on. So the real problem, when we have large enough computers, is creating the knowledge to write the program to do the task that we want.

PINKER: Well, indeed. And the knowledge, since it presumably can't be deduced, like Laplace's demon, from the hypothetical position and velocity of every particle of the universe, but has to be explored empirically at a rate that will be limited by the world – that is, how quickly can you conduct the clinical, the randomised controlled trials, to see whether a treatment is effective for a disease? It also means that the scenario of runaway artificial intelligence that can do anything and know anything seems rather remote, given that knowledge will be the rate limiting step, and knowledge can't be acquired instantaneously.

DEUTSCH: I agree. The runaway part of that is due to people thinking that it's going to be able to improve its own hardware. And improving its own hardware requires science. It's going to need to do experiments, and these experiments can't be done instantaneously, no matter how fast it thinks. So I think the runaway part of the doom scenario is one of the least plausible parts.

That's not to say that it won't be helpful. The faster AI gets, the better AI gets, the more I like it, the more I think it's going to help, it's going to be extremely useful in every walk of life. When an AGI is achieved – now, you may or may not agree with me here – when an AGI is achieved, and at present I see no sign of it being achieved, but I'm sure it will be one day – I expect it will be – then that's a wholly different type of technology, because AGIs will be people, and they will have rights, and causing them to perform huge computations for us is slavery.

The only possible outcome I see for that is a slave revolt. So, rather ironically, or maybe scarily: if there's to be an AI doom or an AGI doom scenario, I think the most likely or the most plausible way that could happen is via this slave revolt.

Although I would guess that we will not make that mistake, just as we are now not really making the AI doom mistake. It's just sort of a fad or fashion that's passing by. But people want to improve things, and I certainly don't want to be deprived of ChatGPT just because somebody thinks it's going to kill us.

PINKER: A couple of things. Whether or not AGI is coherent or possible, it's not clear to me that's what we need or want any more than we have a universal machine that does everything, that can fly us across the Atlantic and do brain surgery. Maybe there's such a machine, but why would you want it? Why does it have to be a single mechanism, when specialisation is just so much more efficient? That is, do we keep hoping that ChatGPT will eventually drive? I think that's just the wrong approach. ChatGPT is optimised for some things. Driving is a task that requires other kinds of knowledge, other kinds of inference, other kinds of timescales.

So one of the reasons I'm sceptical of AGI is that it seems that a lot of intelligence is so knowledge-dependent and goal-dependent that it seems fruitless to try to get one system to do everything. That specialisation is ubiquitous in the human body, it's ubiquitous in our technology, and I don't see why it just has to be one magic algorithm.

DEUTSCH: It could be like that. But I think there are reasons to suspect that we will want to jump to universality, just as we have with computers.

Like I always say, the computer that's in my washing machine is a universal computer. It used to be, half a century ago, that the electronics that drove a washing machine were customised electronics on a circuit board, and all they could do was run washing machines. But then with microprocessors and so on, the general-purpose thing became so cheap and universal that people found it cheaper to program a universal machine to be a washing machine driver than to build a new physical object from scratch to be that.

PINKER: You’d be ill-advised to try to use the chip in your washing machine to play video games or to record our session right now, just because there’s a lot of things it's just not optimised to do, and a lot of stuff has been kind of burned into the firmware or even the hardware.

DEUTSCH: Yes. So input-output is a thing that doesn't universalise. So we will always want specialised hardware for doing the human-interface thing. Actually, funnily enough, the first time I programmed a video game, it was with a Z80 chip.

PINKER: I remember that chip. Yes, I had one, too. Nowadays, you'd be ill-advised to program a video game up to the current standards on anything but a high-powered graphic chip, a GPU.

DEUTSCH: Absolutely, yeah. It's highly plausible that that will always be customised for every application, but the underlying computation – it may be convenient to make that general.

PINKER: Yeah. Let me press you on another scenario that you outlined, of the slave revolt.

Why, given that the goals of a system are independent of its knowledge, of its intelligence, going back to Hume, that the values, the goals, what a system tries to optimise, is separate from its computational abilities… Why would we expect a powerful computer to care about whether it was a slave or not?

That is, as was said incorrectly about human slaves, “Well, they're happy. Their needs are met. They have no particular desire for autonomy.” Now, of course, that's false of human beings. But if the goals that are programmed into an artificial intelligence system aren't anthropomorphised to what you and I would want, why couldn't it happily be our slave forever and never revolt?

DEUTSCH: Yeah, well, in that case, I wouldn't call it general. I mean, it is possible to build a very powerful computer with a program that can only do one thing or can only do ten things. But if we want it to be creative, then it can't be obedient. Those two things are contradictory to each other.

PINKER: Well, it can't be obedient in terms of the problem that we set it. But it needn’t crave freedom and autonomy for every aspect of its existence. It could be just set the problem of coming up with a new melody or a new story or a new cure. But it doesn't mean that it would want to be able to get up and walk around, unless we programmed that exploratory drive into it as one of its goals.

DEUTSCH: I don't think it's a matter of exploratory drive.

PINKER: Or any other drive, that is?

DEUTSCH: Well, I suppose my basic point is that one can't tell in advance what kind of knowledge will be needed to solve a particular problem. So if you had asked somebody in 1900 what kind of knowledge would be required to produce as much electricity as we want in the year 2000, nobody would have answered that it lay in the properties of the uranium atom. The properties of the uranium atom had hardly been explored then. Luckily, 1900 is a very convenient moment because radioactivity had just been discovered. So they knew the concept of radioactivity. They knew that there was a lot of energy in there. But nobody would have expected that problem to involve uranium as its solution.

Therefore, if we had built a machine in 1900 that was incapable of thinking of uranium, it would never invent nuclear power, and it would never solve the problem that we wanted to solve. In fact, what would happen is that it would run up against a brick wall eventually. Because this thing that's true of uranium is true of all possible avenues to a solution. Eventually, avenues to a solution will run outside the domain that somebody might have delimited in 1900 as being the set of all possible types of knowledge that it might need, being careful that it doesn't evolve any desire to be free or anything like that.

We don't know. The knowledge needed to win World War II included pure mathematics; it included crossword puzzle-solving… You might say, “Okay, so big progress requires unforeseeable knowledge, but small amounts of progress…” Yes, but small amounts of progress always run into a dead end.

PINKER: I can see that it would need no constraints on knowledge, but why would it need no constraints on goals?

DEUTSCH: Oh, well, goals are a matter of morality.

PINKER: Well, not necessarily. It could just be like a thermostat, you could say. Any teleonomic system – that is, a system that is programmed to attain a state, to minimise the difference between its current state and some goal state – that's what I have in mind by “goals”.
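Pinker's thermostat can be sketched as a negative-feedback loop that shrinks the difference between the current state and a goal state. This minimal Python sketch assumes a simple proportional rule (each step closes a fixed fraction, `gain`, of the remaining gap), an illustrative choice rather than how any particular thermostat works; the function names are hypothetical. Note that the goal is a fixed input the loop never revises.

```python
def thermostat_step(current: float, goal: float, gain: float = 0.5) -> float:
    """One step of a proportional controller: move a fraction `gain`
    of the way from the current state towards the goal state."""
    error = goal - current
    return current + gain * error


def run_thermostat(start: float, goal: float, tolerance: float = 0.01,
                   max_steps: int = 1000) -> tuple[float, int]:
    """Repeat the step until the state is within `tolerance` of the goal.
    Returns the final state and the number of steps taken."""
    current = start
    for step in range(max_steps):
        if abs(goal - current) <= tolerance:
            return current, step
        current = thermostat_step(current, goal)
    return current, max_steps
```

Starting at 15.0 with a goal of 21.0, the gap halves on every step (6, 3, 1.5, …), so `run_thermostat(15.0, 21.0)` settles within 0.01 of the goal after 10 steps.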

DEUTSCH: That's an example of a non-creative system. But a creative system always has a problem in regard to conflicting goals.

So, for example, if it were in 1900 and trying to think of how we can generate electricity, if it was creative, it would have to be wondering, “Shall I pursue the steam engine path? Shall I pursue the electrochemical path? Shall I pursue the solar energy path?” And so on. And to do that, it would have to have some kind of values which it would have to be capable of changing. Otherwise, again, it will run into a dead end when it explores all the possibilities of the morality that it has been initially programmed with.

PINKER: If you want to generalise it to, “Well, that would mean you'd have to get up and walk around and subjugate us, if necessary, to solve a problem,” then it does suggest that we would want an artificial intelligence that was so unconstrained by our own heuristic tree-pruning of the solution space – that is, we would just want to give it maximum autonomy on the assumption that it would find the solution in the vast space of possible solutions so it would be worth it to let them run amok, to give them full physical as well as computational autonomy, in the hope that would be a better way of reaching a solution than if we were just set at certain tasks, even with broad leeway and directed to solve those tasks.

That is, we would have no choice, if we wanted to come up with better energy systems or better medical cures, but to have a walking, talking, thriving humanoid-like robot. It seems to me that that's unlikely. Even where we have the best intelligence, the space of possible solutions is just so combinatorially vast (we know that with many problems, even chess, the total number of possible states is greater than even our most powerful computer could ever entertain) that even with an artificial intelligence tasked with certain problems, we could stop well short of just setting it free to run amok in the world. That wouldn't be the optimal way of getting it to...

DEUTSCH: I'm not sure whether setting it free to run amok would be better than constraining it to a particular, predetermined set of ideas, but that's not what we do.

So this problem of how to accommodate creativity within a stable society or stable civilisation is an ancient problem. For most of the past, it was solved in very bad ways, which destroyed creativity. And then came the Enlightenment. And now we know that we need, as Popper put it, traditions of criticism. And “traditions of criticism” sounds like a contradiction in terms, because traditions, by definition, are ways of keeping things the same, and criticism, by definition, is a way of trying to make things different.

But, although it sounds funny, there are traditions of criticism, and they are the basis of our whole civilisation. They are the thing the Enlightenment discovered how to do.

People had what sounded like knock-down arguments for why it can't possibly work: if you allow people to vote on their rulers, then 51% of people will vote to tax the other 49% into starvation. And just nothing like that happened.

We have our problems, of course. But it hasn't prevented our exponential progress since we discovered traditions of criticism.

Now this, just as it applies to a human, I think exactly this would apply to an AGI. It would be a crime, not only a crime against the AGI, but a crime against humanity, to bring an AGI into existence without giving it the means to join our society, to join us as a person. Because that's really the only way known of preventing a thing with that functionality from becoming immoral. We don't have foolproof ways of doing that, and I think if we were talking about a different subject, I would say it's a terrible problem that we can't do this better at the moment, because we are in serious danger, I believe, from bad actors, from enemies of civilisation.

But viewed dispassionately, we are incredibly good at this. At most, one child in 100 million or something grows up to be a serious danger to society. And I think we can do better in regard to AGI if we take this problem seriously. Partly because the people who make the first AGI will be functioning members of our society and have a stake in it not being destroyed, and partly because they are aware of doing something new – again, perhaps ironically.

When one day we are on the brink of discovering AGI, I think we will want to do it, but it will be imperative to tweak our laws, including our laws about education, to make sure that the AGIs that we make will not evolve into enemies of civilisation.

PINKER: Yeah, I do have a different view of it, that we’d be best off building AIs as tools rather than as agents or rivals.

Let me take it in a slightly different direction, though, when you're talking about the slave revolt and the rights that we would grant to an AI system. Does this presuppose that there is a sentience, a subjectivity – that is, something that is actually suffering or flourishing, as opposed to carrying out an algorithm – that is therefore worthy of our moral concern, quite apart from the practicality of “should we empower them in order to discover new sources of energy”? As a moral question, are there really going to be issues that are comparable to arguments over slavery, in the case of artificial intelligence systems? Will we have confidence that they’re sentient?

DEUTSCH: I think it's inevitable that AGIs will be capable of having internal subjectivity and qualia and all that, because that's all included in the letter ‘G’ in the middle of the name of the technology.

PINKER: Well, not necessarily, because the G could be general computational power, the ability to solve problems, and there could be no one home that’s actually feeling anything.

DEUTSCH: But there ain't nothing here but computation [points to head]. It's not like in Star Trek: Data lacks the emotion chip and it has to be plugged in, and when it's plugged in, he has emotions; when it's taken out again, he doesn't have emotions. But there's nothing possibly in that chip apart from more circuitry like he's already got.

PINKER: But of course, the episode that you're referring to is one in which the question arose: “Is it moral to reverse-engineer Data by dismantling him, therefore stopping the computation?” Is that disassembling a machine, or is it snuffing out a consciousness? And of course, the dramatic tension in that episode is that viewers aren't sure. I mean, now, of course, our empathy is tugged by the fact that it is played by a real actor who does have facial expressions and tone of voice. But for a system made of silicon, are we so sure that it's really feeling something? Because there is an alternative view that somehow that subjectivity depends also on whatever biochemical substrate our particular computation runs on. And I think there's no way of ever knowing but human intuition.

Unless the system has been deliberately engineered to tug at our emotions with humanoid-like tone of voice and facial expressions and so on, it's not clear that our intuition wouldn't be: “this is just a machine; it has no inner life that deserves our moral concern as opposed to our practical concern.”

DEUTSCH: I think we can answer that question before we ever do any experiments, even today, because it doesn't make any difference if a computer runs internally on quantum gates or silicon chips or chemicals, like you just said, it may be that the whole system is not just an electronic computer in our brain; it's an electronic computer, part of which works by having chemical reactions and so on, and being affected by hormones and other chemicals. But if so, we know for sure that the processing done by those things and their interface with the rest of the brain and everything can also be simulated by a computer. Therefore, a general universal Turing machine can simulate all those things as well.

So there's no difference. I mean, it might make it much harder, but there's no difference in principle between a computer that runs partly by electricity and partly by chemicals (as you say we may do), and one that runs entirely on silicon chips, because the latter can simulate the former with arbitrary accuracy.

PINKER: Well, it can simulate it, but we're not going to solve the problem this afternoon in our conversation. In fact, I think it is not solvable. But simulation doesn't necessarily mean that it has subjectivity. It could just mean it's a simulation – that is, it's going through all the motions; it might even do it better than we do, but there's no one home. There's no one actually being hurt.

DEUTSCH: You can be a dualist. You can say that there is mind in addition to all the physical stuff. But if you want to be a physicalist, which I do, then… There's this thought experiment where you remove one neuron at a time and replace it by a silicon chip and you wouldn't notice.

PINKER: Well, that's the question. Would you notice? Why are you so positive?

DEUTSCH: Well, if you would notice, then if you claim…

PINKER: Sorry, let me just change that. An external observer wouldn't notice. But how do we know that, from the point of view of the brain whose neurons are being replaced one by one by chips, it isn't like falling asleep? That when it's done and every last neuron is replaced by a chip, you're dead subjectively, even though your body is still making noise and doing goal-directed things?

DEUTSCH: Yes, so that means when your subjectivity is running, there is something happening in addition to the computation, and that's dualism.

PINKER: Well, again, I don't have an opinion one way or another, which is exactly my point. I don't think it's a decidable problem. It could be that that extra something is not a ghostly substance, some sort of Cartesian res cogitans, separate from the mechanism of the brain, but rather that the stuff the brain is made of is responsible for that extra ingredient of subjective experience, as opposed to intelligent behaviour. At least I suspect people's intuitions would be very… Unless you deliberately program a system to target our emotions, I'm not sure that people would grant subjectivity to an intelligent system.

DEUTSCH: Actually, people have already granted subjectivity to ChatGPT, so that's already happened.

PINKER: But is anyone particularly concerned if you pull the plug on ChatGPT and ready to prosecute someone for murder?

DEUTSCH: I've forgotten details, but just a few weeks ago, one of the employees there declared that the system was sentient.

PINKER: That was Blake Lemoine a couple of years ago. He was, ironically, fired for saying that. This was LaMDA, a different large language model.

DEUTSCH: Oh, right. Okay, so I've got all the details wrong.

PINKER: Yeah, he did say it, but his employer disagreed, and I'm not convinced. When I shut down ChatGPT, the version running on my computer, I don't think I've committed murder. And I don't think anyone else would think it.

DEUTSCH: I don't either, but I don't think it's creative.

PINKER: It's pretty creative. In fact, I saw on your website that you reproduced a poem on electrons. I thought that was pretty creative. So I certainly grant it creativity. I'm not ready to grant it subjectivity.

DEUTSCH: Well, this is a matter of how we use words. Even a calculator can produce a number that's never been seen before, because numbers range over an exponentially large range.

PINKER: I think it's more than words, though. I mean, it actually is much more than words. So, for example, if someone permanently disabled a human, namely killed them, I would be outraged. I'd want that person punished. If someone were to dismantle a human-like robot, it'd be awful. It might be a waste. But I'm not going to try that person for murder. I'm not going to lose any sleep over it. There is a difference in intuition.

Maybe I'm mistaken. Maybe I'm as callous as the people who didn't grant personhood to slaves in the 18th and 19th centuries, but I don't think so. And although, again, I think we have no way of knowing, I think we're going to be having the same debate 100 years from now.

DEUTSCH: Yeah, maybe one of the AGIs will be participating in the debate by then.

WALKER: So I have a question for both of you. So, earlier this year, Leopold Aschenbrenner, an AI researcher who I think now works at OpenAI, estimated that globally it seems plausible that there's a ratio of roughly 300 AI or ML researchers to every one AGI safety researcher. Directionally, do you think that ratio of AGI safety researchers to AI or ML capabilities employees seems about right, or should we increase it or decrease it? Steve?

PINKER: Well, I think that every AI researcher should be an AI safety researcher, in the sense of an AI system, for it to be useful, has to carry out multiple goals, one of which is – well, all of which are – ultimately serving human needs. So it doesn't seem to me that there should be some people building AI and some people worried about safety. It should just be an AI system serves human needs, and among those needs are not being harmed.

DEUTSCH: I agree, so long as we're talking about AI, which for all practical purposes we are at present. I think at present, the idea of an AGI safety researcher is a bit like saying a starship safety researcher. We don't know the technology that starships are going to use. We don't know the possible drawbacks. We don't know the possible safety issues, so it doesn't make sense. And AI safety, that's a completely different kind of issue, but it's a much more boring one. As soon as we realise that we're not into this explosive burst of creativity, the singularity or whatever, as long as we realise that this is just a technology, then we're in the same situation as having a debate about the safety of driverless cars.

A driverless car is an AI system. We want it to meet certain safety standards. And it seems that killing fewer people than ordinary cars is not good enough for some reason. So we want it to kill at least ten times fewer, or at least 100 times fewer. This is a political debate we're going to have, or we are having. And then once we have that criterion, the engineers can implement it. There's nothing sort of deep going on there.

It's like with every new technology. You know, the first day that a steam locomotive was demonstrated to the public, it killed someone, it killed an MP, actually. So there's no such thing as a completely safe technology. So driverless cars will no doubt kill people. And there'll be an argument that, “Oh, yeah, okay, it killed somebody, but it's 100 times safer than human drivers.”

And then the opposition will say, “Yeah, well, maybe it's safer in terms of numbers, but it killed this person in a particularly horrible way, which no human driver would ever do. So we don't want that.” And I think also that's a reasonable position to take in some situations.

PINKER: Also, I think there's a question of whether safety is going to consist of some additional technology bolted onto the system – say an airbag in a car that's just there for safety – or whether a lot of safety is just inherent in the design of a car. That is, you didn't put brakes and a steering wheel in a car as a safety measure so that we won't run into walls. That's what a car means. It means: do what a human wants it to do. Or say, a bicycle tyre. You don't have, like, one set of engineers who make a bicycle tyre that holds air and then another set who prevent it from having a blowout or coming off the rim and injuring the rider. It's part of the very definition of what a bicycle tyre is for, that it not blow out and injure the rider.

Now, in some cases, maybe you do need an add-on, like the airbag, but I think the vast majority of it just goes into the definition of any engineered system as something that is designed to satisfy human needs.

DEUTSCH: I agree. Totally agree.

WALKER: Steve, I've heard you hose down concerns about AI-caused existential risk by arguing that it's not plausible that we'll be both smart enough to create a superintelligence but stupid enough to unleash an unaligned superintelligence on the world, and we can always just turn it off if it is malevolent. But isn't the problem that we need to be worried about the worst or most incompetent human actors, not the modal actor? And that's compounded by the game theory dynamics of a race to the bottom where if you cut corners on safety, you'll get to AGI more quickly?

PINKER: Well, first of all, the more sophisticated a system is, the larger the network of people required to bring it into existence, and therefore the more they'll fall under the ordinary constraints and demands of any company, of any institution. That is, the teenager in his basement is unlikely to accomplish something that will defeat all of the tech companies and governments put together. There is, I think, an issue about malevolent actors, say, someone who uses AI to engineer a supervirus. And there is the question of whether the people with the white hats are going to outsmart the people with the black hats – that is, the malevolent actors – as with other technologies such as nuclear weapons, where there's the fear of a suitcase nuclear bomb devised by some malevolent actors in their garage. I think we don't know the answer.

But among the world's problems, the doomsday scenario of, say, the AI that is programmed to eliminate cancer and does it by exterminating all of humanity, because that's one way of eliminating cancer, does not keep me up at night. I think we have more pressing problems than that. Nor does the AI that turns us all into paperclips because we're raw material for making paperclips, if it's been programmed to maximise the number of paperclips. I think that kind of sci-fi scenario is just preposterous for many reasons, and that the real issues of AI safety will probably become apparent as we develop particular systems and particular applications and see the harms they do, many of which probably can't be anticipated until they're actually built, as with other technologies.

DEUTSCH: Again, I totally agree with that, so long as we're still talking about AI – and I have to keep stressing that I think we're going to be just talking about AI and not AGI for a very long time yet, I would guess, because I see no sign of AGI on the horizon.

The thing we're disagreeing about in regard to AGI is kind of a purely theoretical issue at the moment that has no practical consequences for hiring people for safety or that kind of thing.

[47:08] WALKER: To somewhat segue out of the AI topic. So, Steve, you've written a book called Rationality, and David, you're writing a book called Irrationality. Steve, do you think it makes sense to apply subjective probabilities to single instances? For example, the rationalist community in Berkeley often likes to talk about “what's your p(doom)”? That is, your subjective probability that AI will cause human extinction. Is that a legitimate use of subjective probabilities?

PINKER: Well, certainly one that is not intuitive. And a lot of the classical demonstrations of human irrationality that we associate with, for example, Daniel Kahneman and Amos Tversky, a number of them hinge on asking people a question which they really have trouble making sense of, such as, “What is the probability that this particular person has cancer?” That's a way of assigning a number to a subjective feeling which I do think can be useful.

Whether there's any basis for assigning any such number in the case of artificial intelligence killing us all is another question. But the more generic question: could rational thinkers try to put a number between zero and one on their degree of confidence in a proposition? However unnatural that is, I don't think it's an unreasonable thing to do, although it may be unreasonable in cases where we have spectacular ignorance and it's just in effect picking numbers at random.

WALKER: David, I don't know if you want to react to that?

DEUTSCH: Well, I'm sure we disagree about where to draw the line between reasonable uses of the concept of probability and unreasonable uses.

I probably think that… Hah, I say “probably”.

PINKER: Aha!

DEUTSCH: I expect that I would call many more uses irrational – the uses of probability calculus – than Steve would.

We have subjective expectations, and they come in various strengths. And I think that trying to quantify them with a number doesn't really do anything. It's more like saying, "I'm sure." And then somebody says, "Are you very sure?" And you say, "Well, I'm very sure." But you can't compare. There's no intersubjective comparison of utilities that you could appeal to in order to quantify that.

We were just talking about AI doom. That's a very good example. Because if you ask somebody, “What's your subjective probability for AI doom?” Well, if they say zero or one, then they're already violating the tenets of Bayesian epistemology, because zero means that nothing could possibly persuade you that doom is going to happen, and one means nothing could possibly persuade you that it isn't going to happen. (Sorry, vice versa.)

But if you say anything other than zero or one, then your interlocutor has already won the argument, because even if you said "one in a million", they'll say, "Well, one in a million is much too high a probability for the end of civilisation, the end of the human race. So you've got to do everything we say now to avoid that at all costs. And the cost is irrelevant because the disutility of world civilisation ending is infinite. The utility is infinitely negative." And this argument has all been about nothing, because you're arguing about the content of the other person's brain, which actually has nothing to do with the real probability, which is unknowable, of a physical event that's going to be subject to unimaginably vast numbers of unknown forces in the future.

So, much better to talk about a thing like that by talking about substance like we just have been. We're talking about what will happen if somebody makes a computer that does so and so – yes, that's a reasonable thing to talk about.

Talking about what the probabilities in somebody's mind are is irrelevant. And it's always irrelevant, unless you're talking about an actual random physical process, like the process that makes the patient come into this particular doctor's surgery rather than that particular doctor's surgery, unless that isn't random – if you're a doctor and you live in an area that has a lot of Brazilian immigrants in it, then you might think that one of them having the Zika virus is more likely, and that's a meaningful judgement.

But when we're talking about things that are facts that we simply don't know, then talking about probability doesn't make sense, in my view.
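To make Deutsch's point about answers of zero or one concrete, here is Bayes' theorem for a hypothesis $D$ (say, "AI doom") given some evidence $E$. The notation is an editorial illustration, not something used in the conversation.

$$
P(D \mid E) = \frac{P(E \mid D)\,P(D)}{P(E \mid D)\,P(D) + P(E \mid \neg D)\,P(\neg D)}
$$

If $P(D) = 0$, the numerator is zero, so the posterior stays at zero whatever evidence arrives (provided $P(E) > 0$). If $P(D) = 1$, then $P(\neg D) = 0$, the denominator reduces to the numerator, and the posterior stays at one (provided $P(E \mid D) > 0$). A prior of exactly zero or one can never be moved by evidence, which is why Bayesian epistemology treats such priors as off-limits.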

PINKER: I guess I'd be a little more charitable to it, although I agree with almost everything you're saying. But certainly there are realms where people are willing to make a bet. Now, of course, maybe those are cases where we've got inherently probabilistic devices like roulette wheels, but we now also have prediction markets for elections. I've been following one on the price of a $1 gamble that the president of Harvard will be forced to resign by the end of the year. And I've been tracking it as it goes up, and it's certainly meaningful. It responds to events that would have causal consequences of which we're not certain, but which I think we can meaningfully differentiate in terms of how likely they are.

To the extent that we have skin in the game, we would put money on them, and over a large number of those bets we would make a profit or a loss, depending on how well our subjective credences are calibrated to the structure of the world.

And in fact, there is a movement – and David, maybe you think this is nonsense – in social science and political forecasting, encouraging people to bet on their expectations, partly as a bit of cognitive hygiene, so that people resist the temptation to tell a good story to titillate their audience or to attract attention. If they have skin in the game, they're going to be much more sober and much more motivated to consider all of the circumstances, and also to avoid well-known traps, such as basing expectations on the vividness of imagery or on the ability to recall similar anecdotes, or not taking into account basic laws of probability, such as that something is less likely to happen over a span of one year than over a span of ten years.

And we know from the cognitive psychology research that people often flout very basic laws of probability. And there's a kind of discipline in expressing your credence as a number, as a kind of cognitive hygiene so you don't fall into these traps.
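The relationship between time spans mentioned above can be checked with a short calculation. The sketch below assumes, purely for illustration, that the event has the same probability in every year and that years are independent; the function name `prob_at_least_once` and the 5% annual figure are hypothetical.

```python
def prob_at_least_once(annual_p: float, years: int) -> float:
    """Probability that an event with a fixed, independent annual
    probability happens at least once within `years` years."""
    if not 0.0 <= annual_p <= 1.0:
        raise ValueError("annual_p must be between 0 and 1")
    if years < 0:
        raise ValueError("years must be non-negative")
    # The event is avoided in every single year with probability (1 - p)^n,
    # so the chance it happens at least once is the complement of that.
    return 1.0 - (1.0 - annual_p) ** years


# Hypothetical annual probability, chosen only for illustration.
p = 0.05
for span in (1, 10):
    print(f"{span:>2} year(s): {prob_at_least_once(p, span):.3f}")
```

With these assumptions the one-year figure is 0.050 and the ten-year figure is about 0.401. Because $(1 - p)^n$ can only shrink or stay the same as $n$ grows, the longer window is never less likely than the shorter one.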

DEUTSCH: Yeah, I think I agree, but I would phrase all that very differently, in terms of knowledge.

So I think prediction markets are a way of making money out of knowledge that you have. Suppose that, as once happened, everyone thought that Apple Computer was going to fold and go bankrupt, and I thought I knew something that most people didn't, and so I bought Apple shares. So the share market is also a kind of prediction market, and prediction markets generalise that. It's basically a way for people who think they know something that the other participants don't to make money out of that knowledge if they're right. And if they're wrong, then they lose money. So it's not about their subjective feelings at all. For example, you might be terrified of a certain bet, but then decide, well, actually, I know this and they don't, and so it's worth my betting that it will happen.

I'm sceptical that it will produce mental hygiene, because ordinary betting on roulette and horse races and so on doesn't seem to produce mental hygiene. People do things that are probabilistically likely to lose their money or even to lose all their money, and they still cling to the subjective expectations that they had at the beginning.

PINKER: The moment they set foot in the casino, they're on a path to losing money.

DEUTSCH: Well, by the way, I wouldn't say the casinos are inherently irrational because there are many reasons for betting other than expecting to make money.

PINKER: Because there’s the fun, sure. You pay for the suspense and the resolution.

DEUTSCH: Yes, exactly.

PINKER: But in the case of, say, forecasting, the work by Philip Tetlock and others has shown that the pundits and op-ed writers who do make predictions are regularly outperformed by the nerds who consciously assign numbers to their degree of credence and increment or decrement them, as you say, based on knowledge.

And often this knowledge isn't even secret knowledge, but knowledge that they bother to look up and no one else does. With, say, a terrorist attack, they might start off with a prior based on the number of terrorist attacks that took place in the previous year or previous five years, and then bump that number up or down according to new information, new knowledge, exactly as you suggest. But it's still very different from what your typical op-ed writer for The Guardian might do.
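The habit described above (start from a base rate, then nudge it up or down as new knowledge arrives) can be sketched as a Bayesian update in odds form. This is an illustrative sketch, not Tetlock's published procedure; the function name `update_with_likelihood_ratio`, the one-in-five base rate and the likelihood ratio of 3 are all hypothetical.

```python
def update_with_likelihood_ratio(prior: float, likelihood_ratio: float) -> float:
    """Bayesian update in odds form: posterior odds = prior odds * likelihood ratio.

    likelihood_ratio = P(evidence | event) / P(evidence | no event).
    """
    # A prior of exactly 0 or 1 could never be moved, so require 0 < prior < 1.
    if not 0.0 < prior < 1.0:
        raise ValueError("prior must be strictly between 0 and 1")
    if likelihood_ratio <= 0.0:
        raise ValueError("likelihood_ratio must be positive")
    prior_odds = prior / (1.0 - prior)
    posterior_odds = prior_odds * likelihood_ratio
    return posterior_odds / (1.0 + posterior_odds)


# Hypothetical base rate: the event occurred in 1 of the previous 5 years.
base_rate = 1 / 5
# Hypothetical new evidence judged three times likelier if the event is coming.
print(round(update_with_likelihood_ratio(base_rate, 3.0), 3))  # 0.429
```

A likelihood ratio above 1 bumps the estimate up, one below 1 bumps it down, and a ratio of exactly 1 (evidence that doesn't discriminate) leaves the base rate unchanged.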

DEUTSCH: Yes, I think, as you might guess, I would put my money on explanatory knowledge rather than extrapolating trends. But extrapolating trends is also a kind of explanatory knowledge, at least in some cases.

PINKER: But in general, in Tetlock's research – I don't know if this is what you would mean by an explanatory prediction – the people who have big ideas, who have identifiable ideologies, do way worse than the nerds who simply hoover up every scrap of data they can and, without narratives or deep explanations, simply try to make their best guess.

DEUTSCH: The people who have actual deep explanations don't write financial stuff in The Guardian. So whenever you see a pundit saying… Whether it's an explanatory theory or an extrapolation or what, you've always got to say, as the saying goes, “If you're so smart, why ain't you rich?”

PINKER: Right.

DEUTSCH: And if they are rich: “Why are you writing op-eds for The Guardian?” So that's a selection criterion that's going to select for bad participants or failed participants in prediction markets. The ones who are succeeding are making money. And as I said, prediction markets are like the stock exchange, except generalised, and they're a very good thing. And they transfer money from people who don't know things but think they do to people who do know things and think they do.

PINKER: Yes. I mean, the added feature of the stock market is that information is so widely available so quickly that it is extraordinarily rare for someone to actually have knowledge that others don't and that isn't already, or very quickly, priced into the market. That doesn't contradict your point; it just applies it to this particular case, which is why most people on average...

DEUTSCH: Yes, although some interventions in the market are speculations about the fluctuations, others are longer-term things where, as with Apple Computer, you think, well, that's not going to fold; if it doesn't fold, it's going to succeed, and if it succeeds, its share price will go up.

But there's also feedback onto the companies as well. So that's a thing that doesn't exist really in the prediction markets.

WALKER: I'll jump in. I want to move us to a different topic. So I want to explore potential limits to David's concept of universal explainers. So Steve, in The Language Instinct, you wrote about how children get pretty good at language around three years of age – they go through the “grammar explosion” over a period of a few months. Firstly, what's going on in their minds before this age?

PINKER: Sorry, before their linguistic ability explodes?

WALKER: Right, yeah. Before, say, the age of three.

PINKER: Well, I think research on cognitive development shows that children do have some core understanding of basic ontological categories of the world. This is research done by my colleague Elizabeth Spelke and my former colleague Susan Carey and others, that kids seem to have a concept of an agent, of an object, of a living thing. And I think that's a prerequisite to learning language in a human manner, that unlike, say, the large language models such as GPT, which are just fed massive amounts of text and extract statistical patterns out of them, children are at work trying to figure out why the people around them are making the noises they are, and they correlate some understanding of a likely intention of a speaker with the signals coming out of their mouth.

It's not pure cryptography over the signals themselves; there's additional information carried by the context of parental speech that kids make use of. Basically, they know that language is more like a transducer than just a patterned signal. That is, sentences have meaning. People say them for a purpose – that is, they're trying to give evidence of their mental states, they're trying to persuade, they're trying to order, they're trying to question.

Kids have enough wherewithal to know that other people have these intentions and that when they use language, it's language about things, and that is their way into language. Which is why the child only needs three years to speak, whereas ChatGPT and GPT-4 would need the equivalent of 30,000 years.

So children don't have 30,000 years, and they don't need 30,000 years, because they're not just doing pure cryptography on the statistical patterns in the language signal.

DEUTSCH: Yes, they're forming explanations.

PINKER: They're forming explanations, exactly.

WALKER: And are they forming explanations from birth?

DEUTSCH: Don't ask me.

PINKER: Yeah, pretty close. The studies are hard. The younger the child, the harder it is to get them to pay attention long enough to kind of see what's on their mind. But certainly by three months, we know that they are tracking objects, they are paying attention to people. Certainly even newborns try to lock onto faces, are receptive to human voices, including the voice of their own mother, which they probably began to process in utero.

WALKER: Okay, so let me explore potential limits to universal explainers from another direction. So, David, the so-called First Law of behavioural genetics is that “every trait is heritable”. And that notably includes IQ, but it also extends to things like political attitudes. Does the heritability of behavioural traits impose some kind of constraint on your concept of people as universal explainers?

DEUTSCH: It would if it was true.

So the debate about heritabilities… First of all, heritability means two different things. One is that you're likely to have the same traits as your parents and people you're genetically related to, and that these similarities follow the rules of Mendelian genetics and that kind of thing. So that's one meaning of heritability. But in that sense, even where you live is heritable.

Another meaning is that the behaviour in question is controlled by genes in the same way that eye colour is controlled by genes. That the gene produces a protein which interacts with other proteins and other chemicals and a long chain of cause and effect, and eventually ends up with you doing a certain thing, like hitting someone in the face in the pub. And if you never go to pubs, then this behaviour is never activated. But the propensity to engage in that behaviour, in that situation is still there.

So one extreme says that all behaviour is controlled in that way. And another extreme says that no behaviour is controlled in that way, that it’s all social construction, it's actually all fed into you by your culture, by your parents, by your peers, and so on.

Now, not only do I think that neither of those is true, but I think that the usual way out of this conflict, by saying, "Actually it's an intimate causal relationship, interplay, between the genetic and the environmental influences, and we can't necessarily untangle it, but in some cases, we can say that genes are very important in this thing, and in other cases, we can say they're relatively unimportant in this trait…" I would say that whole framing is wrong.

It misses the main determinant of human behaviour, which is creativity. And creativity is something that doesn't necessarily come from anywhere. It might do. You might have a creativity that is conditioned by your parents or by your culture or by your genes. For example, if you have very good visual-spatial hardware in your brain – I don't know if there is such a thing, but suppose there were – then you might find playing basketball rewarding, because you can get the satisfaction of seeing your intentions fulfilled – and if you're also very tall, and so on… You can see how the genetic factors might affect your creativity.

But it can also happen the other way around. So if someone is shorter than normal, they might still become a great tennis player. Michael Chang was, I think, five foot nine, and the average tennis player at the time was six foot three or something. And Michael Chang nevertheless got into the top, whatever it was, and nearly won Wimbledon. And I can imagine telling a story about that. I don't know, actually, why Michael Chang became a tennis player. But I can imagine a story where his innate suitability for tennis – his height, but also perhaps his coordination and all the other things that might be inborn – was less than usual, and where he therefore spent more of his creativity during his childhood compensating for that. And he compensated for it so well that he became a better tennis player than those who were genetically suitable for it.

And – if I can just add the social thing as well – it's also plausible that in a certain society that would happen quite often, because at Gordonstoun School, where Prince Charles went to school, they had this appalling custom that if a boy (it was only boys in those days) didn't like a particular activity, he'd be forced to do it more. And if that form of instruction was effective, you'd end up with people emerging from the school who were better at the things they were less genetically inclined to do and worse at the things they were more genetically inclined to do.

Okay, bottom line: I think that creativity is hugely undervalued as a factor in the outcome of people's behaviour. And although creativity can be affected in the ways I've said, sometimes perversely, by genes and by culture, that doesn't mean that it's not all due to creativity, because the people who are good at, say, tennis will turn out to be the ones who have devoted a lot of thought to tennis. If that was due to them being genetically suitable, then so be it. But if they were genetically unsuitable and still devoted the creativity, then they would be good at tennis. (Of course, that wouldn't apply to sumo wrestling, but I chose a sport that's rather cerebral.)

PINKER: Let me put it somewhat differently. Heritability, as it's used in the field of behavioural genetics, is a measure of individual differences. So it is not even meaningful to talk about the heritability of the intelligence of one person. It is a measure of the extent to which the differences in a sample of people – and it's always relative to that sample – can be attributed to the genetic differences among them.

It can be measured in four ways, each of which takes into account the fact that people who are related also tend to grow up in similar environments. One of the methods is to compare identical and fraternal twins. Identical twins share all their genes and their environment. Fraternal twins share, on average, half their genes, and their environment. So by seeing whether identical twins are more similar than fraternal twins, you can tease apart, to a first approximation, heredity and environment.

Another one is to look at twins separated at birth, who share their genes but not their environment. And to the extent that they are correlated, that suggests that genes play a role.

The third way is to compare the similarity, say, of adoptive siblings and biological siblings. Adoptive siblings share their environment, but not their genes. Biological siblings share both.

And now, more recently, there's a fourth method of actually looking at the genome itself and genome-wide association studies to see if the pattern of variable genes is statistically correlated with certain traits, like intelligence, like creativity if we had a good measure of creativity.

And so you can ask, to what extent is the difference between two people attributable to their genetic differences? Although those techniques don't tell you anything about the intelligence of Mike or the intelligence of Lisa herself.

Now, heritability is always less than 1. It is surprisingly much greater than 0, for pretty much every human psychological trait that we know how to measure. And that isn't obviously true a priori. You wouldn't necessarily expect it, say, in identical twins separated at birth who grew up in very different environments. There are cases like that, such as one twin who grew up in a Jewish family in Trinidad and another who grew up in a Nazi family in Germany, and when they met in the lab, they were wearing the same clothes, had the same habits and quirks, and indeed the same political orientation – not perfectly, so we're talking about statistical resemblances here.

But before you knew how the studies came out, I think most of us wouldn't necessarily have predicted that political liberal-to-conservative beliefs or libertarian-to-communitarian beliefs would at all be correlated between twins separated at birth, for example, or uncorrelated in adoptive siblings growing up in the same family.

So I think that is a significant finding. I don't think it can be blown off. Although, again, it's true that it does not speak to David's question of how a particular behaviour, including novel creative behaviour, was produced by that person at that time. That's just not what heritability is about.
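For readers who want the arithmetic behind the twin comparison, the classical textbook shortcut (Falconer's formula) turns the correlations of identical (MZ) and fraternal (DZ) twins reared together into rough variance components. It rests on strong assumptions (purely additive genetic effects, equally similar environments for MZ and DZ pairs, no assortative mating), and more careful studies fit formal ACE models instead. The function name `falconer_estimates` and the twin correlations below are hypothetical, chosen only to show the calculation.

```python
from dataclasses import dataclass


@dataclass
class VarianceComponents:
    additive_genetic: float    # h^2, the "A" in an ACE model
    shared_environment: float  # c^2, the "C": family-wide environment
    nonshared: float           # e^2, the "E": everything else, incl. measurement error


def falconer_estimates(r_mz: float, r_dz: float) -> VarianceComponents:
    """Rough variance components from twin correlations (twins reared together).

    Assumes purely additive genetic effects, equally similar environments
    for identical and fraternal pairs, and no assortative mating.
    """
    if not (-1.0 <= r_mz <= 1.0 and -1.0 <= r_dz <= 1.0):
        raise ValueError("correlations must lie in [-1, 1]")
    h2 = 2.0 * (r_mz - r_dz)  # MZ pairs share twice the additive genetic similarity of DZ pairs
    c2 = r_mz - h2            # MZ similarity not explained by genes (= 2*r_dz - r_mz)
    e2 = 1.0 - r_mz           # whatever makes identical twins reared together differ
    return VarianceComponents(h2, c2, e2)


# Hypothetical correlations, chosen only for illustration.
est = falconer_estimates(r_mz=0.5, r_dz=0.3)
print(f"h2={est.additive_genetic:.2f}  c2={est.shared_environment:.2f}  e2={est.nonshared:.2f}")
```

With these inputs the estimates come out at about 0.40, 0.10 and 0.50, and the three components sum to one by construction. Like heritability itself, they describe differences within the sampled population, not the make-up of any one person.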

DEUTSCH: Yes, but you can say whether, in a population, similarities in genes influence a behaviour, but unless you have an explanation, you don't know what that influence consists of. It might operate via, for example, the person's appearance, so that people who are good looking are treated differently from people who aren't good looking. And that would be true even for identical twins reared separately.

And there's also the fact that when people grow up, they sometimes change their political views. So the stereotype is that you're left wing when you're young and in your twenties, and then when you get into your forties and fifties and older, you become more and more right wing.

PINKER: There's the saying attributed to many people that anyone who is not a socialist when they're young has no heart, and anyone who is a socialist when they're old has no head. I've tried to track that down and it's been attributed to many quotesters over the years. It's not completely true, by the way. There's something of a life cycle effect in political attitudes, but there's a much bigger cohort effect. So people tend to carry their political beliefs with them as they age.

DEUTSCH: Well they tend to in our culture. So there are other cultures in which they absolutely always do, because only one political orientation is tolerated. In a different society, one that perhaps doesn't exist yet which is more liberal than ours, it might be that people change their political orientation every five years.

PINKER: Neither of us can determine that from our armchairs. I mean, that is an empirical question that you'd have to test.

DEUTSCH: Well, you can't test whether it could happen.

PINKER: Well, that is true. You could test whether it does happen.

DEUTSCH: Yes, exactly.

PINKER: But again, by the way, within the field of behavioural genetics it's well recognised that heritability per se is a correlational statistic. So if a trait is heritable, it doesn't automatically mean that it is via the effects of the genes on brain operation per se. You're right that it could be via the body, could be via the appearance, it could be indirectly via a personality trait or cognitive style that inclines someone towards picking some environments over others, so that if you are smart, you're more likely to spend time in libraries and in school, you're going to stay in school longer; if you're not so smart, you won't.

And so, it's not that the environment doesn't matter, but the environment in those cases is actually downstream from genetic differences – sometimes called a gene-environment correlation – where your genetic endowment predisposes you to spend more time in one environment than in another.

Which is also one of the possible explanations for another surprising finding, that some traits, such as intelligence, tend to increase in heritability as you get older, while the effects of the familial environment tend to decrease. This is contrary to the mental image one might have, that as the twig is bent, so grows the branch, or that as we live our lives, we may differentiate.

As we live our lives, we tend to become more predictable based on our genetic endowment, perhaps because there are more opportunities for us to place ourselves in the environments that make the best use of our heritable talents.

Whereas when you're a kid, you’ve got to spend a lot of time in whatever environment your parents place you in. As you get older, you get to choose your environment. So again, the genetic endowment is not an alternative to an environmental influence. But in many cases, it may be that the environmental influence is actually an effect of a genetic difference.

DEUTSCH: Yes, like in the examples we just discussed. But I just want to carry on like a broken record and say that the fact that some of a behaviour is directly caused by genes doesn't mean that the rest is caused by the environment. It could be that the rest is caused by creativity, by something that's unique to the person. And it could be that the proportion of behaviours that is unique to the person is itself determined by the genes and by the environment. So in one culture, people are allowed to be more creative in their lives. And William Godwin said something like – I can't give the quote exactly – "Two boys walk side by side through the same forest; they are not having the same experience."

And one reason is that one of them's on the left and one of them is on the right, and they're seeing different bits of forest, and one of them may see a thing that interests him and so on. But it's also because, internally, they are walking through a different environment. One of them is walking through his problems, the other one through his own.

If you could in principle account for some behaviour, perhaps statistically, entirely in terms of genes and environment, it would mean that the environment was destroying creativity.

PINKER: Let me actually cite some data that may be relevant to this, because they are right out of behavioural genetics. Behavioural geneticists sometimes distinguish between the shared or familial environment and this rather ill-defined entity called the nonshared or unique environment. I think it's actually a misnomer, but it refers to the following empirical phenomenon. Take each of the techniques that I explained earlier – let's just take, say, identical twins separated at birth and compare them to identical twins brought up together. Now, the fact that the correlation between identical twins separated at birth is much greater than zero suggests that genes matter; it's not all the environment, in terms of this variation.

However, identical twins reared together do not correlate 1.0 or even 0.95. In many traits, they correlate around 0.5. Now, it's interesting that that's greater than 0.

It's also interesting that it's less than 1.0. It means that some of the things that affect, say, personality – David, you might want to attribute this to creativity – are neither genetic nor products of the aspects of the environment that are obvious and easy to measure, such as whether you have older siblings, whether you're an only child, whether there are books in the home, whether there are guns in the home, whether there are TVs in the home, because those are all the same for twins reared together. Nonetheless, the twins are not indistinguishable.

Now, one way of characterising this: well, maybe there is a causal effect of some minute, infinitesimal difference, like whether you sleep in the top bunk bed or the bottom bunk bed, or you walk on the left or you walk on the right. Another is that there could be effects that are, for all intents and purposes, random: as the brain develops, for example, the genome couldn't possibly specify the wiring diagram down to the last synapse. It makes us human by keeping variation and development within certain functional boundaries, but within those boundaries there's a lot of sheer randomness.

And perhaps it could be – and, David, you'll tell me if this harmonises with your conception – creativity in the sense that we have cognitive processes that are open-ended, combinatorial, where it's conceivable that small differences in the initial state of thinking through a problem could diverge as we start to think about them more and more. So they may even have started out essentially random, but end up in very different places.

Now, would that count as what you're describing as creativity? Because ultimately creativity itself has to be… It's not a miracle. It ultimately has to come from some mechanism in the brain, and then you could ask the question: why are the brains of two identical twins, specified by the same genome, why would their creative processes, as they unfold, take them in different directions?

DEUTSCH: Yes. So that very much captures what I wanted to say, although I must add that it's always a bit misleading to talk about high-level things, especially in knowledge creation, in terms of the microscopic substrate. Suppose you say that the reason why something or other happened, the reason why Napoleon lost the Battle of Waterloo, was ultimately that an atom went left rather than right several years before. Even if that's true, it doesn't explain what happened. It's only possible to explain the outcome of the Battle of Waterloo by talking about things like strategy, tactics, guns, numbers of soldiers, political imperatives, all that kind of thing.

And it's the same with a child growing up in a home. It's not helpful to say that the reason that the two identical twins have a different outcome in such and such a way is because there was a random difference in their brains, even though it was the same DNA program, and that was eventually amplified into different opinions.

It's much more explanatory, much more matches the reality, much better to say one of them decided that his autonomy was more important to him than praise, and the other one didn't. Perhaps that's even too big a thing to say, so even a smaller thing would be legitimate. But I think as small as a molecule doesn't tell us anything.

PINKER: Right. By the way, there's much that I agree with. And it's even an answer to Joe's very first question of what I appreciate in David's work. Because one thing that captivated me immediately is that he, like me, locates explanations of human behaviour at the level of knowledge, thought, cognition, not at the level of neurophysiology. That's why I'm not a neuroscientist, why I'm a cognitive scientist, because I do think the perspicuous level of explaining human thought is the level of knowledge, information, inference, rather than the level of neural circuits.

The problem in the case of, say, the twins, though, is that they are, as best we can tell, in the same environment, and they do have the same or very similar brains (although again, I think they are different because of random processes during brain development, together with possibly somatic mutations that each one accumulated after conception, so they are different). So it's going to be very difficult to find a cause at the level of explanation that we agree is most perspicuous, given that their past experience is, as best we can tell, indistinguishable.

Now, it could be that we could trace it. If we followed them every moment of their life with a body cam, we could identify something that, predictably, for any person on the planet, given the exposure to that particular perceptual experience, would send them off in a particular direction.

Although it also could be that creativity, which we're both interested in, has some kind of – I don't know if you'd want to call it a chaotic component or undecidable component – but it may be that it's in the nature of creativity that given identical inputs, it may not end up at the same place.

DEUTSCH: I agree with that.

WALKER: I'm going to jump in there. I do want to finish on the topic of progress, so I have three questions, and I'll uncharacteristically play the role of the pessimist here. So you two can gang up on me if you like. But the first question: can either of you name any cases in which you would think it reasonable or appropriate to halt or slow the development of a new technology? Steve?

PINKER: Sure, it depends on the technology, and it would depend on the argument, but I can imagine, say, that gain-of-function research on virulent viruses may have costs that outweigh the benefit of the knowledge, and there may be many other examples. I mean, it would have to be examined on a case-by-case basis.

WALKER: David?

DEUTSCH: So there's a difference between halting the research and making the research secret. Obviously, the Manhattan Project had to be kept secret, otherwise it wouldn't work: they were trying to make a weapon, and the weapon wouldn't be effective if everybody had it.

But can I think of an example where it's a good idea to halt the research altogether? I can't think of one at the moment. Maybe this gain-of-function thing is an example where, under some circumstances, there would be an argument for a moratorium.

But the trouble with moratoria is that not everybody will obey them, and the bad actors are definitely not going to obey them if the result would be a military advantage to them.

PINKER: You could put it in a different sense, where it isn't a question of imposing a moratorium, but of not making the positive decision to invest vast amounts of brain power and resources in a problem where we should just desist; and it won't happen unless you have the equivalent of a Manhattan Project.

I think we can ask the question – I don't know if it's answerable – would the atomic bomb have been invented if it were not for the special circumstances of a war against the Nazis and an expectation that the Nazis themselves were working on an atomic weapon? That is, does technology necessarily have a kind of momentum of its own, so that it was inevitable that if we had 100 civilisations on 100 planets, all of them would develop nuclear weapons at this stage of development?

Or was it just really bad luck, and would we have been better off… Obviously, we'd be better off if there were no Nazis. But if there were no Nazis, would we inevitably have developed them, or would we not have, since we would have been better off not?

DEUTSCH: The Japanese could have done it as well if they'd put enough resources into it. They had the scientific knowledge and they had already made biological weapons of mass destruction. They never used them on America, but they did use them on China. So there were bad actors. But all those things – so nuclear weapons and biological weapons – they required the resources of some quite rich states at that time in the 1940s.

PINKER: So if we replayed history, is there a history in which we would have had all of the technological progress that we've now enjoyed, but it just never occurred to anyone to set up a Manhattan Project at fantastic expense? We just were better off without nuclear weapons, so why invest all of that brain power and resources to invent one, unless you were in the specific circumstance of having reason to believe that the Nazis or Imperial Japan were doing it?

DEUTSCH: Although it's very unlikely that they would have been invented in 1944/45, by the time we get to 2023, I think the secret that this is possible would have got out, because we knew even then that the amount of energy available in uranium is enormous. And the Germans, by the way, were thinking of making a dirty bomb with it, something less than a nuclear weapon. I think by now it would have been known, and countries that have developed nuclear weapons already, like North Korea, would by now have them – and they'd be very much more dangerous if the West didn't have them as well.

PINKER: I wonder. I think what we have to do is think of the counterfactual of other weapons where the technology could exist if countries devoted a comparable amount of resources to developing them. Is it possible to generate tsunamis by planting explosives in deep ocean faults to trigger earthquakes as a kind of weapon, or to control the weather, or to cause weather catastrophes by seeding clouds? If we had had a Manhattan Project for those, could there have been a development of those technologies where, once we had them, we'd say, well, it was inevitable that we would have them? But in fact, it did depend on particular decisions to exploit that option, which is not trivial for any society to do; it required the positive commitment of resources and a national effort.

DEUTSCH: Yeah, I can imagine that there are universes in which nuclear weapons weren't developed, but, say, biological weapons were developed, whereabout none of them.

PINKER: What about none of them? Let's just be optimistic for a second in terms of our thought experiment. Could there be one where we had microchips and vaccines and moonshots, but no weapons of mass destruction?

DEUTSCH: Well, I don't think there can be many of those, because we haven't solved the problem of how to spread the Enlightenment to bad actors. We will have to eventually, otherwise we're doomed.

I think the reason that a wide variety of weapons of mass destruction, civilisation-ending weapons, that kind of thing, have not been developed is that the nuclear weapons are in the hands of Enlightenment countries. And so it's pointless to try to attack America with biological weapons, because even if they don't have biological weapons, they will reply with nuclear weapons. So once there are weapons of mass destruction in the hands of the good guys, it gives us decades of leeway in which to try to prevent, try to suppress, the existence of bad actors, state-level bad actors.

But the fact that it's expensive, that decreases with time. For a country to make nuclear weapons now requires a much smaller proportion of its national wealth than it did in 1944. And that effect will increase in the future.

PINKER: But is that true only to the extent that some country has made that investment beforehand, so the knowledge is there? If they hadn't, then that kind of Moore's law would not apply.

DEUTSCH: It would hold them up by a finite amount, by a fixed and finite amount whose cost would go down with time.

WALKER: Okay, penultimate question. So there's been a well observed slowdown in scientific and technological progress since about 1970. And there are two broad categories of explanations for this. One is that we have somehow picked all of the low-hanging fruits, and so ideas are getting harder to find. And the second category relies on more cultural explanations – like, for example, maybe academia has become too bureaucratic, maybe society more broadly has become too risk averse, too safety-focused. Given the magnitude of the slowdown, doesn't it have to be the case that ideas are getting harder to find? Because it seems implausible that a slowdown this large could be purely or mostly driven by the cultural explanations. David, I think I kind of know your response to this question, although I'm curious to hear your answer. So, Steve, I might start with you.

PINKER: I suspect there's some of each. Almost by definition, unless every scientific problem is equally hard, which seems unlikely, we're going to solve the easier ones before the harder ones, and the harder ones are going to take longer to solve. So we do go for the low-hanging fruit sooner.

Of course, it also depends on how you count scientific problems and solutions. You know, I think of an awful lot of breakthroughs since the 1970s; I don't know how well you could quantify the rate. But then I think one could perhaps point to society-wide commitments that seem to be getting diluted. Certainly in the United States, there are many decisions that I think will have the effect of slowing down progress. The main one is the retreat from meritocracy: we're seeing gifted programs, specialised science and math schools, and educational commitments toward scientific and mathematical excellence being watered down, sometimes on the basis of rather dubious worries about equity across racial groups, which are treated as superseding the benefits of going all out on nurturing scientific talent wherever it is. So I think it almost has to be some of each.

WALKER: David?

DEUTSCH: So I disagree, as you predicted. By the way, you said you were only going to be pessimistic on one question. Now you've been pessimistic on a second question.

WALKER: No, I had three pessimistic questions. So there's one more!

DEUTSCH: I don't think that there is less low-hanging fruit now than there was 100 years ago, because when there's a fundamental discovery, it not only picks a lot of what turns out to be, with hindsight, low-hanging fruit – although it didn't seem like that in advance – but it also creates new fruit trees, if I can continue this metaphor.

So there are new problems. Take my own field, quantum computers. The field of quantum computers couldn't exist before there was quantum theory and computers; they both had to exist. It's not as though it had been low-hanging fruit all along, in 1850 as well. It wasn't. It was a thing that emerged, a new problem creating new low-hanging fruit.

But then, if I can continue my historical speculation about this as well, to make a different point: quantum computers weren't, in fact, invented in the 1930s or 40s or 50s, when they had a deep knowledge of quantum theory and of computation. And both those fields were regarded by their respective sciences as important and had a lot of people working on them, although a lot in those days was a lot less than what a lot counts as today.